Maximize the Power of dbt and Snowflake to Achieve Efficient and Scalable Data Vault Solutions

phData

AUGUST 10, 2023

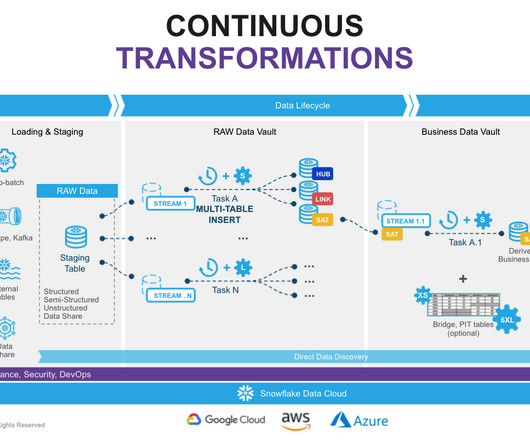

Implementing a data vault architecture requires integrating multiple technologies to support its design principles and meet the organization’s requirements.

Data Acquisition: Extracting data from source systems and making it accessible.

Implement Data Lineage and Traceability Path: Data Vault 2.0
