How to Build an ETL Data Pipeline in ML

The MLOps Blog

MAY 17, 2023

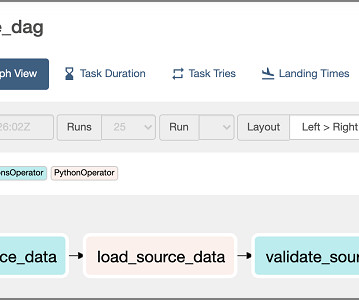

We also discuss different types of ETL pipelines for ML use cases and provide real-world examples of their use to help data engineers choose the right one.

What is an ETL data pipeline in ML?

It is common to use the terms "ETL data pipeline" and "data pipeline" interchangeably.
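ETL stands for extract, transform, load: data is pulled from a source, reshaped into a usable form, and written to a target store. The following is a minimal sketch of those three stages in plain Python. It assumes a small hypothetical CSV export as the source and an in-memory SQLite table named `features` as the target. The function names `extract`, `transform`, `load`, and `run_pipeline` are illustrative and do not come from any particular framework.

```python
import csv
import io
import sqlite3

# Hypothetical raw data standing in for a source system (e.g., an exported file).
RAW_CSV = """user_id,age,clicks
1,34,10
2,,5
3,29,not_available
4,41,20
"""


def extract(raw_text):
    """Extract: read raw records from the source as dicts of strings."""
    return list(csv.DictReader(io.StringIO(raw_text)))


def transform(rows):
    """Transform: drop invalid rows, cast types, and add a scaled feature."""
    clean = []
    for row in rows:
        try:
            record = {
                "user_id": int(row["user_id"]),
                "age": int(row["age"]),
                "clicks": int(row["clicks"]),
            }
        except ValueError:
            # Skip rows with missing or non-numeric values.
            continue
        clean.append(record)

    # Scale clicks by the maximum value (assumes non-negative counts).
    max_clicks = max((r["clicks"] for r in clean), default=0)
    for r in clean:
        r["clicks_scaled"] = r["clicks"] / max_clicks if max_clicks else 0.0
    return clean


def load(records, conn):
    """Load: write the transformed records into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS features "
        "(user_id INTEGER PRIMARY KEY, age INTEGER, clicks INTEGER, clicks_scaled REAL)"
    )
    conn.executemany(
        "INSERT OR REPLACE INTO features "
        "VALUES (:user_id, :age, :clicks, :clicks_scaled)",
        records,
    )
    conn.commit()


def run_pipeline(raw_text, conn):
    """Chain the three stages: extract -> transform -> load."""
    load(transform(extract(raw_text)), conn)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    run_pipeline(RAW_CSV, conn)
    for row in conn.execute("SELECT * FROM features ORDER BY user_id"):
        print(row)
```

Keeping each stage in its own function makes the stages easy to test and replace independently. In a production ML setting, the same split would typically map onto dedicated tooling, such as a workflow orchestrator for scheduling and a data warehouse or feature store as the load target.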
