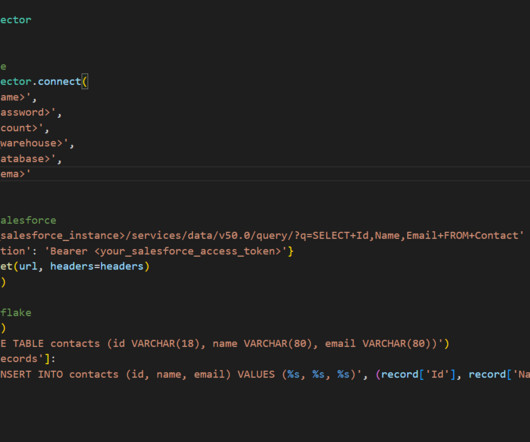

The power of remote engine execution for ETL/ELT data pipelines

IBM Journey to AI blog

MAY 15, 2024

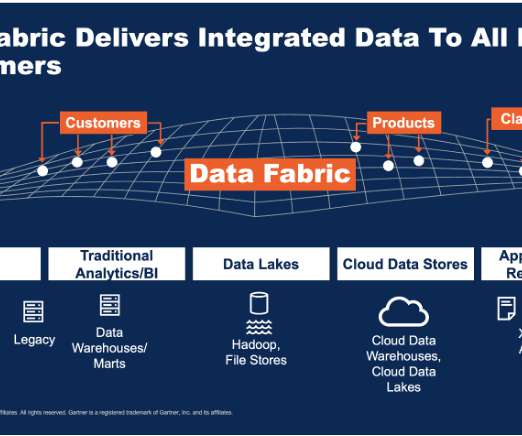

According to International Data Corporation (IDC), stored data is set to increase by 250% by 2025, with data rapidly propagating on-premises and across clouds, applications and locations, often at compromised quality. This situation will exacerbate data silos, increase costs and complicate the governance of AI and data workloads.
