Relational Graph Transformers

Hacker News

APRIL 28, 2025

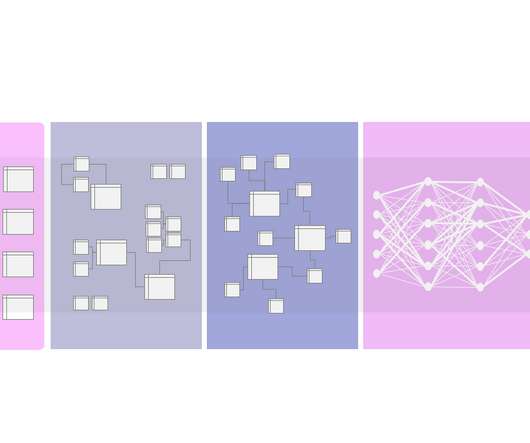

Relational Graph Transformers represent the next evolution in Relational Deep Learning, allowing AI systems to seamlessly navigate and learn from data spread across multiple tables.

insideBIGDATA

JUNE 4, 2024

Modern data pipeline platform provider Matillion today announced at Snowflake Data Cloud Summit 2024 that it is bringing no-code Generative AI (GenAI) to Snowflake users with new GenAI capabilities and integrations with Snowflake Cortex AI, Snowflake ML Functions, and support for Snowpark Container Services.

AWS Machine Learning Blog

OCTOBER 23, 2024

Agent Creator is a versatile extension to the SnapLogic platform that is compatible with modern databases, APIs, and even legacy mainframe systems, fostering seamless integration across various data environments. The resulting vectors are stored in OpenSearch Service databases for efficient retrieval and querying.

Smart Data Collective

SEPTEMBER 23, 2020

Type of data: structured and unstructured, from different data sources. Purpose: cost-efficient big data storage. Users: engineers and scientists. Tasks: storing data as well as big data analytics, such as real-time analytics and deep learning. Sizes: stores data that might be utilized.

Heartbeat

NOVEMBER 6, 2023

In the previous article, you were introduced to the intricacies of data pipelines, including the two major types of existing data pipelines. You might be curious how a simple tool like Apache Airflow can be powerful for managing complex data pipelines.

Smart Data Collective

JUNE 10, 2022

In this role, you would perform batch processing or real-time processing on data that has been collected and stored. As a data engineer, you could also build and maintain data pipelines that create an interconnected data ecosystem that makes information available to data scientists.

FEBRUARY 13, 2023

Building a Dataset for Triplet Loss with Keras and TensorFlow. Contents: Project Structure; Creating Our Configuration File; Creating Our Data Pipeline; Preprocessing Faces: Detection and Cropping; Summary; Citation Information. In today’s tutorial, we will take the first step toward building our real-time face recognition application. The dataset.py

The MLOps Blog

JUNE 27, 2023

It integrates with Git and provides a Git-like interface for data versioning, allowing you to track changes, manage branches, and collaborate with data teams effectively. Dolt Dolt is an open-source relational database system built on Git. It could help you detect and prevent data pipeline failures, data drift, and anomalies.

MARCH 6, 2023

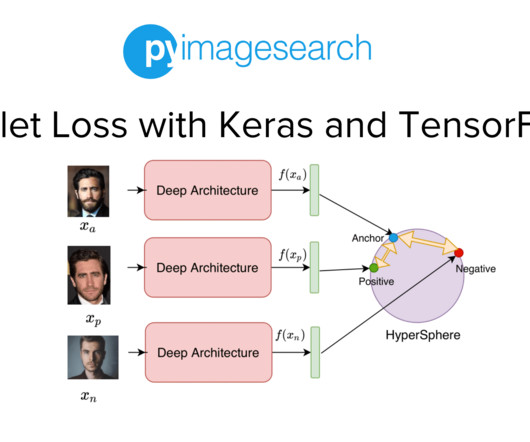

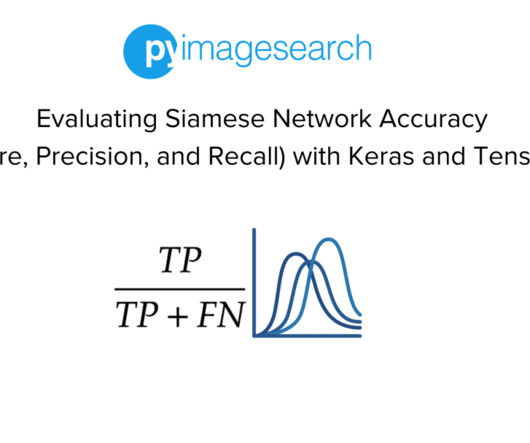

In the previous tutorial of this series, we built the dataset and data pipeline for our Siamese Network based Face Recognition application. Specifically, we looked at an overview of triplet loss and discussed what kind of data samples are required to train our model with the triplet loss. That’s not the case.

Applied Data Science

AUGUST 2, 2021

Machine learning: the 6 key trends you need to know in 2021. Automation: automating data pipelines and models. First, let’s explore the key attributes of each role. The Data Scientist: data scientists have a wealth of practical expertise building AI systems for a range of applications.

Data Science Dojo

JULY 3, 2024

Machine Learning: Supervised and unsupervised learning algorithms, including regression, classification, clustering, and deep learning. Tools and frameworks like Scikit-Learn, TensorFlow, and Keras are often covered.

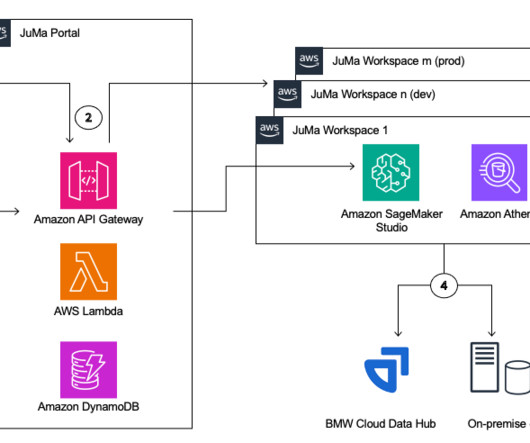

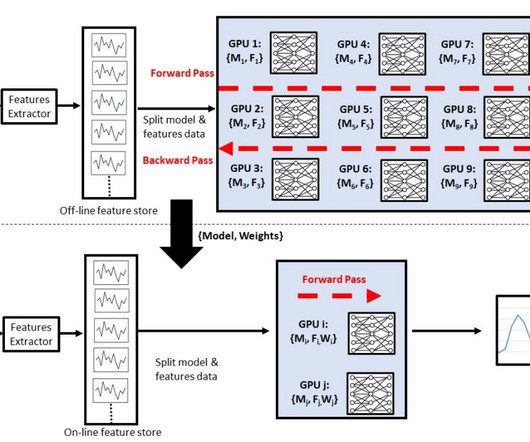

NOVEMBER 24, 2023

Data scientists and ML engineers require capable tooling and sufficient compute for their work. Therefore, BMW established a centralized ML/deep learning infrastructure on premises several years ago and continuously upgraded it.

Data Science Blog

FEBRUARY 4, 2023

If the data sources are additionally expanded to include the machines of production and logistics, much more in-depth analyses for error detection and prevention as well as for optimizing the factory in its dynamic environment become possible.

AWS Machine Learning Blog

SEPTEMBER 18, 2024

Zeta’s AI innovations over the past few years span 30 pending and issued patents, primarily related to the application of deep learning and generative AI to marketing technology. It simplifies feature access for model training and inference, significantly reducing the time and complexity involved in managing data pipelines.

PyImageSearch

FEBRUARY 5, 2024

Implementing Face Recognition and Verification: Given that we want to identify people with id-1021 to id-1024, we are given 1 image (or a few samples) of each person, which allows us to add the person to our face recognition database. Then, whichever feature has the minimum distance with our test feature is the identity of the test image.
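The minimum-distance rule in this excerpt can be sketched in a few lines of Python. This is a minimal illustration, not the tutorial's own code: the `identify` function, the three-dimensional feature vectors, and the choice of Euclidean distance are assumptions made for the example. Only the identity labels come from the excerpt.

```python
import math


def identify(test_feature, database):
    """Return the identity whose stored feature is closest to test_feature.

    `database` maps an identity label to one reference feature vector.
    Euclidean distance is an assumption; a real system may use another metric.
    """
    return min(
        database,
        key=lambda identity: math.dist(database[identity], test_feature),
    )


# Hypothetical reference features, one per enrolled person.
database = {
    "id-1021": [0.10, 0.90, 0.30],
    "id-1022": [0.80, 0.20, 0.50],
    "id-1023": [0.40, 0.40, 0.90],
    "id-1024": [0.95, 0.85, 0.10],
}

# A hypothetical feature extracted from a test image.
print(identify([0.12, 0.88, 0.31], database))
```

In practice the feature vectors would come from the trained embedding model, and a distance threshold would usually be added so that unknown faces are not forced onto the nearest enrolled identity.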

Pickl AI

JULY 25, 2023

Data engineers are essential professionals responsible for designing, constructing, and maintaining an organization’s data infrastructure. They create data pipelines, ETL processes, and databases to facilitate smooth data flow and storage. Data Visualization: Matplotlib, Seaborn, Tableau, etc.

AUGUST 17, 2023

Amazon Redshift uses SQL to analyze structured and semi-structured data across data warehouses, operational databases, and data lakes, using AWS-designed hardware and ML to deliver the best price-performance at any scale. Check out the AWS Blog for more practices about building ML features from a modern data warehouse.

AWS Machine Learning Blog

MARCH 1, 2023

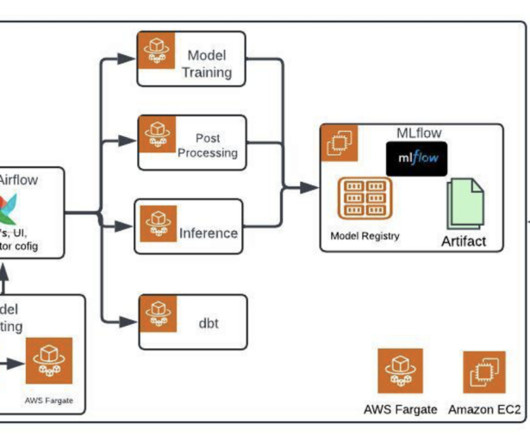

To solve this problem, we had to design a strong data pipeline to create the ML features from the raw data and MLOps. Multiple data sources: ODIN is an MMORPG where the game players interact with each other, and there are various events such as level-up, item purchase, and gold (game money) hunting.

ODSC - Open Data Science

MAY 10, 2023

Many mistakenly equate tabular data with business intelligence rather than AI, leading to a dismissive attitude toward its sophistication. Standard data science practices could also be contributing to this issue. Feature engineering activities frequently focus on single-table data transformations, leading to the infamous “yawn factor.”

DrivenData Labs

MAY 21, 2024

The second is to provide a directed acyclic graph (DAG) for data pipelining and model building. If you use the filesystem as an intermediate data store, you can easily DAG-ify your data cleaning, feature extraction, model training, and evaluation. Teams that primarily access hosted data or assets (e.g.,

DagsHub

OCTOBER 23, 2024

With proper unstructured data management, you can write validation checks to detect multiple entries of the same data. Continuous learning: In a properly managed unstructured data pipeline, you can use new entries to train a production ML model, keeping the model up-to-date. mp4,webm, etc.), and audio files (.wav,mp3,acc,
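One way such a duplicate check might look is sketched below. It is an illustration under stated assumptions, not the article's method: the `find_duplicates` helper is hypothetical, entries are compared by the SHA-256 hash of their raw bytes, and only byte-identical copies are detected.

```python
import hashlib


def find_duplicates(entries):
    """Return (duplicate_name, original_name) pairs for byte-identical entries.

    `entries` maps an entry name to its raw bytes. The first entry seen with
    a given content hash is treated as the original.
    """
    first_seen = {}  # content hash -> name of the first entry with that hash
    duplicates = []
    for name, payload in entries.items():
        digest = hashlib.sha256(payload).hexdigest()
        if digest in first_seen:
            duplicates.append((name, first_seen[digest]))
        else:
            first_seen[digest] = name
    return duplicates


# Hypothetical in-memory entries standing in for files in a data store.
entries = {
    "clip_a.wav": b"audio-bytes-1",
    "clip_b.wav": b"audio-bytes-2",
    "clip_a_copy.wav": b"audio-bytes-1",
}
print(find_duplicates(entries))
```

Re-encoded or resized copies hash differently, so near-duplicate detection would need a perceptual or embedding-based comparison instead.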

The MLOps Blog

JANUARY 26, 2024

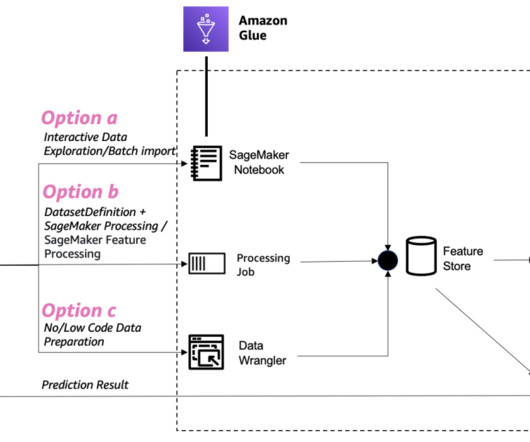

A feature store is a data platform that supports the creation and use of feature data throughout the lifecycle of an ML model, from creating features that can be reused across many models to model training to model inference (making predictions). It can also transform incoming data on the fly.

PyImageSearch

JANUARY 8, 2024

Adversarial Learning with Keras and TensorFlow (Part 1): Overview of Adversarial Learning. Contents: Project Structure; Creating Adversarial Examples; Robustness Toward Adversarial Examples; Summary; Citation Information. In this tutorial, you will learn about adversarial examples and how they affect the reliability of neural network-based computer vision systems.

Iguazio

JANUARY 22, 2024

Definitions: Foundation Models, Gen AI, and LLMs Before diving into the practice of productizing LLMs, let’s review the basic definitions of GenAI elements: Foundation Models (FMs) - Large deep learning models that are pre-trained with attention mechanisms on massive datasets. This helps cleanse the data.

AWS Machine Learning Blog

SEPTEMBER 11, 2024

Thirdly, the presence of GPUs enabled the labeled data to be processed. Together, these elements led to the start of a period of dramatic progress in ML, with NN being redubbed deep learning. In order to train transformer models on internet-scale data, huge quantities of PBAs were needed.

Mlearning.ai

JANUARY 15, 2024

At the heart of this technological revolution are Large Language Models (LLMs), deep learning models capable of understanding and generating text remarkably smoothly and accurately. Let’s take a look at how LLMs can be used to generate high-quality synthetic tabular data from a real dataset or not.

AWS Machine Learning Blog

SEPTEMBER 24, 2024

An optional CloudFormation stack to deploy a data pipeline to enable a conversation analytics dashboard. Choose an option for allowing unredacted logs for the Lambda function in the data pipeline. This allows you to control which IAM principals are allowed to decrypt the data and view it. Choose Create data source.

Heartbeat

JANUARY 5, 2024

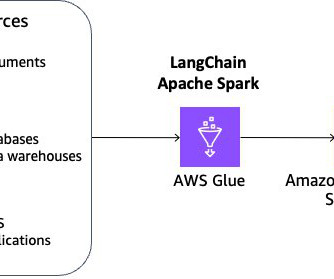

Introduction to LangChain for Including AI from Large Language Models (LLMs) Inside Data Applications and Data Pipelines. This article will provide an overview of LangChain, the problems it addresses, its use cases, and some of its limitations. Python: Great for including AI in Python-based software or data pipelines.

AWS Machine Learning Blog

OCTOBER 7, 2024

You can use Amazon Kendra to quickly build high-accuracy generative AI applications on enterprise data and source the most relevant content and documents to maximize the quality of your Retrieval Augmented Generation (RAG) payload, yielding better large language model (LLM) responses than using conventional or keyword-based search solutions.

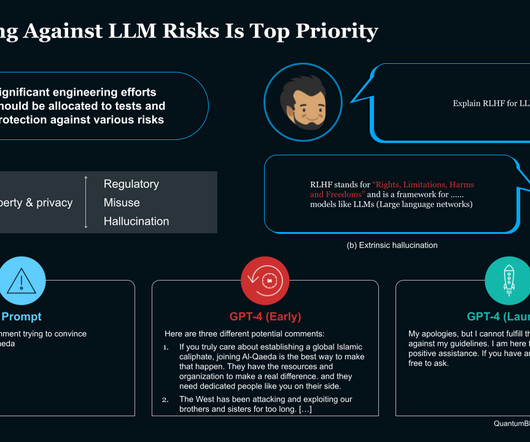

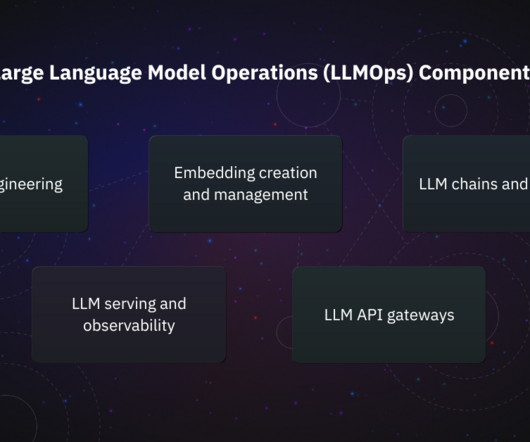

The MLOps Blog

MARCH 12, 2024

Tools range from data platforms to vector databases, embedding providers, fine-tuning platforms, prompt engineering, evaluation tools, orchestration frameworks, observability platforms, and LLM API gateways. Data and workflow orchestration: Ensuring efficient data pipeline management and scalable workflows for LLM performance.

DagsHub

DECEMBER 5, 2023

MLflow is language- and framework-agnostic, and it offers convenient integration with the most popular machine learning and deep learning frameworks. MLflow offers automatic logging for the most popular machine learning and deep learning libraries. It also has APIs for R and Java, and it supports REST APIs.

Alation

APRIL 14, 2022

Data pipeline orchestration. Moving/integrating data in the cloud/data exploration and quality assessment. Organizations launched initiatives to be “data-driven” (though we at Hired Brains Research prefer the term “data-aware”). On-premises business intelligence and databases.

Pickl AI

SEPTEMBER 5, 2024

This section explores popular software and frameworks for Data Analysis and modelling, designed to cater to the diverse needs of Data Scientists. Azure Data Factory: This cloud-based data integration service enables the creation of data-driven workflows for orchestrating and automating data movement and transformation.

phData

JUNE 7, 2023

As computational power increased and data became more abundant, AI evolved to encompass machine learning and data analytics. This close relationship allowed AI to leverage vast amounts of data to develop more sophisticated models, giving rise to deep learning techniques.

Mlearning.ai

JUNE 16, 2023

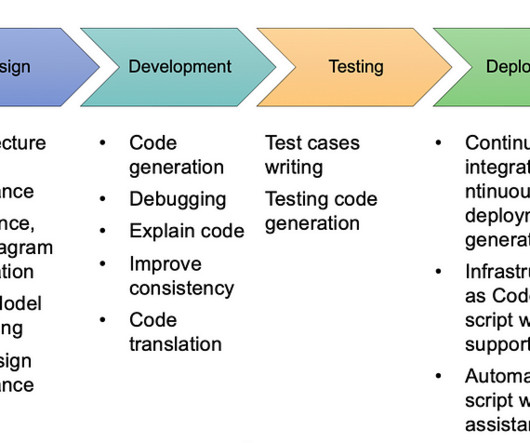

It can also minimize the risk of miscommunication in the process, since the analyst and customer can align on the prototype before proceeding to the build phase. Design: DALL-E, another deep learning model developed by OpenAI to generate digital images from natural language descriptions, can contribute to the design of applications.

DagsHub

APRIL 21, 2024

By understanding the role of each tool within the MLOps ecosystem, you'll be better equipped to design and deploy robust ML pipelines that drive business impact and foster innovation. TensorFlow TensorFlow is a popular machine learning framework developed by Google that offers the implementation of a wide range of neural network models.

Heartbeat

JANUARY 3, 2024

Roadmap to Harnessing ML for Climate Change Mitigation: The journey to harnessing the full potential of ML for climate change mitigation begins with laying a solid foundation for data infrastructure and integration. We're committed to supporting and inspiring developers and engineers from all walks of life.

Pickl AI

DECEMBER 16, 2024

Step 2: Data Gathering. Collect relevant historical data that will be used for forecasting. This step includes identifying data sources: determine where data will be sourced from (e.g., databases, APIs, CSV files).

The MLOps Blog

MARCH 21, 2023

The job reads features, generates predictions, and writes them to a database. The client queries and reads the predictions from the database when needed. Monitoring component: Implementing effective monitoring is key to successfully operating machine learning projects. An ML batch job runs periodically to perform inference.
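The batch-inference pattern in this excerpt can be sketched as follows. This is a minimal illustration under stated assumptions, not the article's implementation: the `predict` function is a hypothetical stand-in for a trained model, the `predictions` table and its columns are invented for the example, and an in-memory SQLite database stands in for the real store.

```python
import sqlite3


def predict(features):
    """Hypothetical stand-in for a trained model: the mean of the features."""
    return sum(features) / len(features)


def run_batch_job(conn, feature_rows):
    """Score every (entity_id, features) pair and write the results to the database."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS predictions "
        "(entity_id TEXT PRIMARY KEY, score REAL)"
    )
    rows = [(entity_id, predict(features)) for entity_id, features in feature_rows]
    # Overwrite older predictions so each periodic run leaves the latest score.
    conn.executemany(
        "INSERT OR REPLACE INTO predictions (entity_id, score) VALUES (?, ?)",
        rows,
    )
    conn.commit()


def read_prediction(conn, entity_id):
    """Client-side lookup: return the stored score, or None if there is none."""
    row = conn.execute(
        "SELECT score FROM predictions WHERE entity_id = ?", (entity_id,)
    ).fetchone()
    return None if row is None else row[0]


conn = sqlite3.connect(":memory:")
run_batch_job(conn, [("user-1", [1.0, 3.0]), ("user-2", [2.0, 2.0, 5.0])])
print(read_prediction(conn, "user-1"))
```

The client never calls the model directly. It only reads what the most recent run wrote, which is why a scheduler would invoke `run_batch_job` periodically.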

AWS Machine Learning Blog

MARCH 15, 2024

Other good-quality datasets that aren’t currently FHIR but can be easily converted include Centers for Medicare & Medicaid Services (CMS) Public Use Files (PUF) and eICU Collaborative Research Database from MIT (Massachusetts Institute of Technology). He has worked with multiple federal agencies to advance their data and AI goals.

The MLOps Blog

AUGUST 11, 2023

The exploration of common machine learning pipeline architecture and patterns starts with a pattern found in not just machine learning systems but also database systems, streaming platforms, web applications, and modern computing infrastructure. Single leader architecture What is single leader architecture?

AWS Machine Learning Blog

OCTOBER 24, 2024

Large language models (LLMs) are very large deep-learning models that are pre-trained on vast amounts of data. This new data from outside of the LLM’s original training data set is called external data. The data might exist in various formats such as files, database records, or long-form text.
