How I Redesigned over 100 ETL into ELT Data Pipelines

KDnuggets

NOVEMBER 15, 2021

Learn how to level up your Data Pipelines!

Data Science Blog

MAY 20, 2024

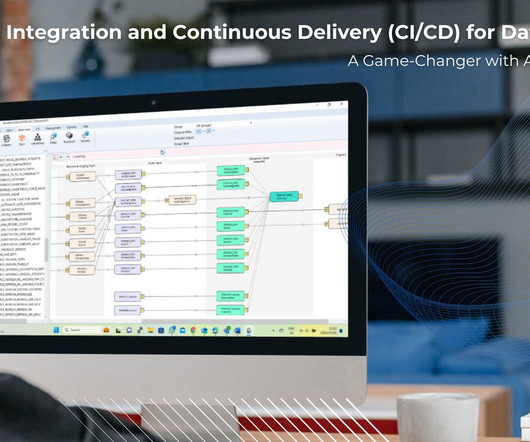

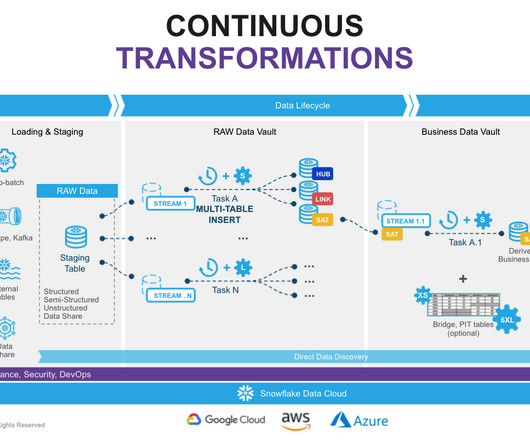

Continuous Integration and Continuous Delivery (CI/CD) for Data Pipelines: It is a Game-Changer with AnalyticsCreator! The need for efficient and reliable data pipelines is paramount in data science and data engineering. They transform data into a consistent format for users to consume.

NOVEMBER 27, 2024

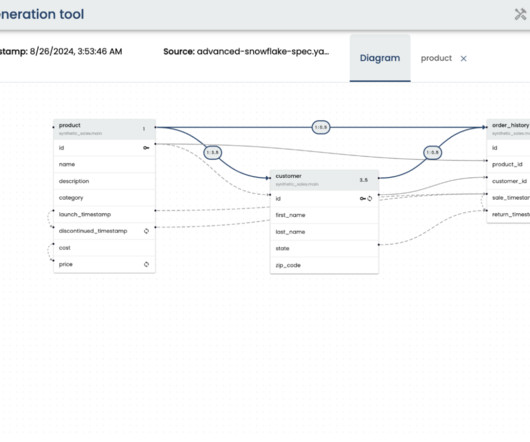

While customers can perform some basic analysis within their operational or transactional databases, many still need to build custom data pipelines that use batch or streaming jobs to extract, transform, and load (ETL) data into their data warehouse for more comprehensive analysis. Create dbt models in dbt Cloud.

IBM Data Science in Practice

JANUARY 13, 2025

By Santhosh Kumar Neerumalla, Niels Korschinsky & Christian Hoeboer. Introduction: This blog post describes how to manage and orchestrate high-volume Extract-Transform-Load (ETL) loads using a serverless process based on Code Engine. The source data is unstructured JSON, while the target is a structured, relational database.
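To make the JSON-to-relational step concrete, here is a minimal sketch of flattening nested JSON documents into fixed-width rows. It is not the authors' Code Engine implementation; the function names `flatten` and `json_lines_to_rows` and the underscore-joined column naming are assumptions made for illustration.

```python
import json


def flatten(obj, prefix=""):
    """Flatten nested dicts into one dict with underscore-joined keys (hypothetical naming scheme)."""
    flat = {}
    for key, value in obj.items():
        name = f"{prefix}{key}"
        if isinstance(value, dict):
            # Recurse into nested objects, extending the key prefix.
            flat.update(flatten(value, prefix=f"{name}_"))
        else:
            flat[name] = value
    return flat


def json_lines_to_rows(lines, columns):
    """Parse JSON documents and project each one onto a fixed column list."""
    rows = []
    for line in lines:
        flat = flatten(json.loads(line))
        # Keys missing from a document become None (NULL in a relational target).
        rows.append(tuple(flat.get(col) for col in columns))
    return rows
```

The resulting tuples can be handed to any database driver's bulk-insert call; fixing the column list up front is what turns loosely shaped documents into a stable relational schema.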

Smart Data Collective

SEPTEMBER 8, 2021

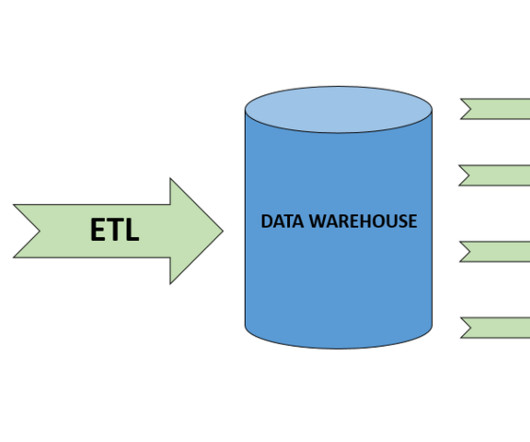

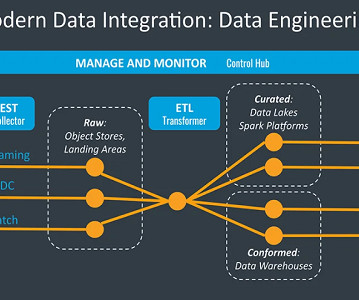

The ETL process is defined as the movement of data from its source to destination storage (typically a Data Warehouse) for future use in reports and analyses. The data is first extracted from a wide array of sources, then transformed and converted to a specific format based on business requirements.
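The extract, transform, and load stages described above can be sketched in a few lines. This is a minimal illustration only, assuming an in-memory list as the source and SQLite as a stand-in for the warehouse; `RAW_ORDERS` and the `orders` table are hypothetical.

```python
import sqlite3

# Hypothetical source records; in practice these would come from files, APIs, or databases.
RAW_ORDERS = [
    {"order_id": "1", "amount": "19.99", "country": "us"},
    {"order_id": "2", "amount": "5.00", "country": "DE"},
    {"order_id": "3", "amount": "not-a-number", "country": "fr"},
]


def extract():
    """Extract: return raw records from the (hypothetical) source."""
    return list(RAW_ORDERS)


def transform(records):
    """Transform: cast types, normalize country codes, drop rows that fail to parse."""
    cleaned = []
    for rec in records:
        try:
            amount = float(rec["amount"])
        except ValueError:
            continue  # skip rows whose amount is not numeric
        cleaned.append((int(rec["order_id"]), amount, rec["country"].upper()))
    return cleaned


def load(rows, conn):
    """Load: write the already-transformed rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, amount REAL, country TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


def run_etl(conn):
    """Run the three stages in order and return what landed in the target."""
    load(transform(extract()), conn)
    return conn.execute(
        "SELECT order_id, amount, country FROM orders ORDER BY order_id"
    ).fetchall()
```

Calling `run_etl(sqlite3.connect(":memory:"))` loads only the two rows that survive the transform step, which is the defining trait of ETL: the target never sees unclean data.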

IBM Journey to AI blog

MAY 15, 2024

Two of the more popular methods, extract, transform, load (ETL) and extract, load, transform (ELT), are both highly performant and scalable. Data engineers build data pipelines, which are called data integration tasks or jobs, as incremental steps to perform data operations and orchestrate these data pipelines in an overall workflow.
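The difference between the two methods is where the transformation runs. As a rough sketch of the ELT ordering, the example below lands raw rows unchanged and then transforms them with SQL inside the database. SQLite stands in for a warehouse here, and the table names `raw_orders` and `revenue_by_country` are assumptions for illustration.

```python
import sqlite3


def run_elt(conn, raw_rows):
    """Load raw rows unchanged, then transform inside the database with SQL."""
    # Load: land the data as-is, every column as text.
    conn.execute("CREATE TABLE raw_orders (order_id TEXT, amount TEXT, country TEXT)")
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", raw_rows)

    # Transform: the database engine does the casting and aggregation.
    conn.execute(
        """
        CREATE TABLE revenue_by_country AS
        SELECT UPPER(country) AS country,
               SUM(CAST(amount AS REAL)) AS revenue
        FROM raw_orders
        GROUP BY UPPER(country)
        """
    )
    conn.commit()
    return conn.execute(
        "SELECT country, revenue FROM revenue_by_country ORDER BY country"
    ).fetchall()
```

Because the raw table is kept, a transformation can be rewritten and rerun later without extracting from the source again, which is one commonly cited reason for choosing ELT.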

Analytics Vidhya

FEBRUARY 20, 2023

Introduction: Azure Data Factory (ADF) is a cloud-based data ingestion and ETL (Extract, Transform, Load) tool. The data-driven workflow in ADF orchestrates and automates data movement and data transformation.

Data Science Dojo

JULY 6, 2023

Data engineering tools are software applications or frameworks specifically designed to facilitate the process of managing, processing, and transforming large volumes of data. Airflow: Apache Airflow is an open-source platform for orchestrating and scheduling data pipelines.
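At its core, orchestration means running tasks in an order that respects their dependencies. The sketch below shows that idea with Python's standard-library `graphlib` (Python 3.9+). It is not Airflow's API; `run_pipeline` and the task names are hypothetical.

```python
from graphlib import TopologicalSorter


def run_pipeline(tasks, dependencies):
    """Run callables in dependency order.

    `tasks` maps a task name to a zero-argument callable.
    `dependencies` maps a task name to the set of tasks that must run before it.
    """
    # static_order() yields each task only after all of its upstream tasks.
    order = list(TopologicalSorter(dependencies).static_order())
    results = [tasks[name]() for name in order]
    return order, results
```

A real orchestrator adds scheduling, retries, and monitoring on top of this ordering step; a dependency cycle makes `static_order()` raise `graphlib.CycleError`.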

phData

MARCH 14, 2024

In data analytics processes, choosing the right tools is crucial for ensuring efficiency and scalability. Two popular players in this area are Alteryx Designer and Matillion ETL, both offering strong solutions for handling data workflows with Snowflake Data Cloud integration.

Pickl AI

JULY 8, 2024

Summary: This blog explains how to build efficient data pipelines, detailing each step from data collection to final delivery. Introduction: Data pipelines play a pivotal role in modern data architecture by seamlessly transporting and transforming raw data into valuable insights.

Pickl AI

OCTOBER 17, 2024

Summary: The ETL process, which consists of data extraction, transformation, and loading, is vital for effective data management. Following best practices and using suitable tools enhances data integrity and quality, supporting informed decision-making. Introduction: The ETL process is crucial in modern data management.

Pickl AI

OCTOBER 17, 2024

Summary: This article explores the significance of ETL Data in Data Management. It highlights key components of the ETL process, best practices for efficiency, and future trends like AI integration and real-time processing, ensuring organisations can leverage their data effectively for strategic decision-making.

Pickl AI

JUNE 7, 2024

Summary: Choosing the right ETL tool is crucial for seamless data integration. Top contenders like Apache Airflow and AWS Glue offer unique features, empowering businesses with efficient workflows, high data quality, and informed decision-making capabilities. Choosing the right ETL tool is crucial for smooth data management.

phData

JUNE 14, 2023

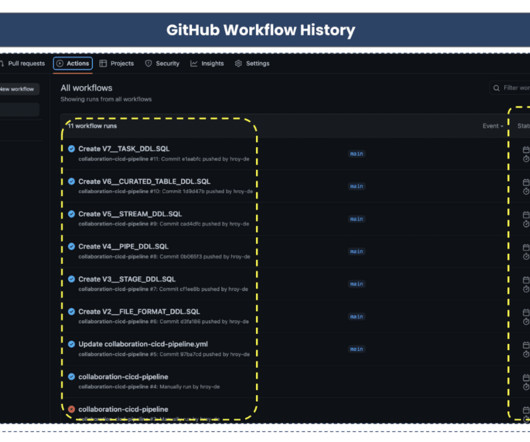

In recent years, data engineering teams working with the Snowflake Data Cloud platform have embraced the continuous integration/continuous delivery (CI/CD) software development process to develop data products and manage ETL/ELT workloads more efficiently.

phData

JULY 12, 2023

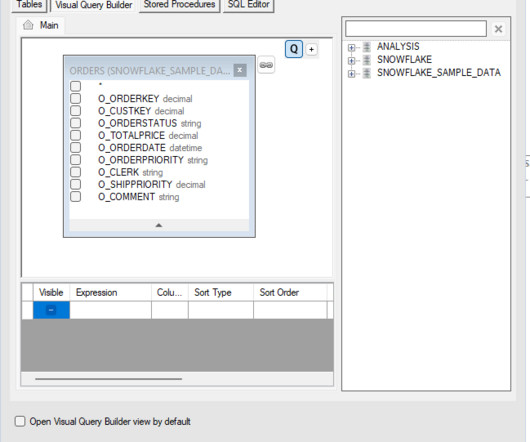

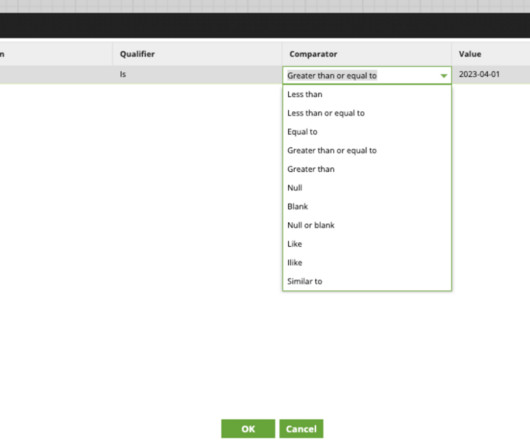

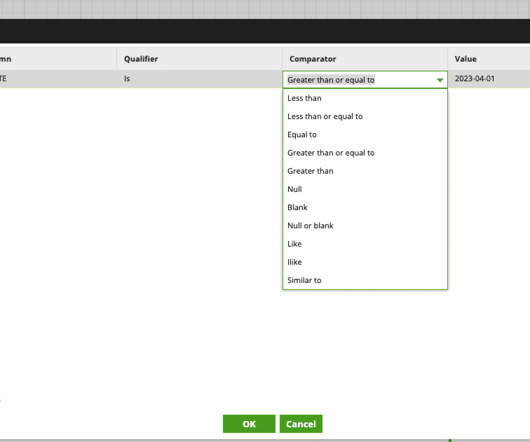

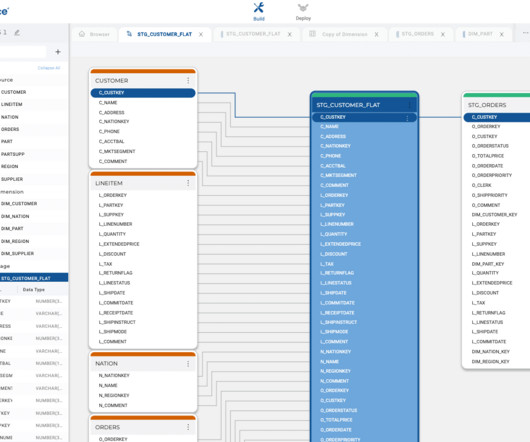

Unlike traditional methods that rely on complex SQL queries for orchestration, Matillion Jobs provide a more streamlined approach. By converting SQL scripts into Matillion Jobs, users can take advantage of the platform’s advanced features for job orchestration, scheduling, and sharing. What is Matillion ETL?

phData

APRIL 21, 2023

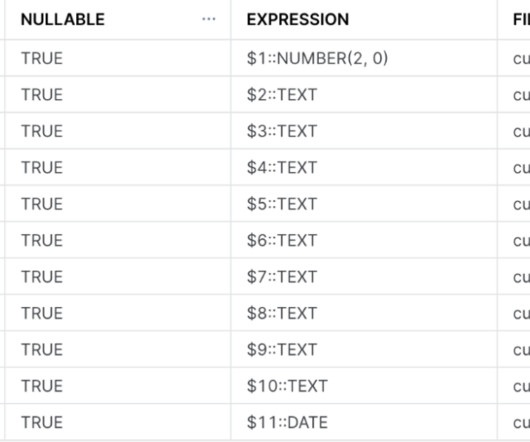

Unlike traditional methods that rely on complex SQL queries for orchestration, Matillion Jobs provide a more streamlined approach. By converting SQL scripts into Matillion Jobs, users can take advantage of the platform’s advanced features for job orchestration, scheduling, and sharing. In our case, this table is “orders.”

Applied Data Science

AUGUST 2, 2021

Automation: Automating data pipelines and models. The most common data science languages are Python and R; SQL is also a must-have skill for acquiring and manipulating data. The Data Engineer: Not everyone working on a data science project is a data scientist.

Data Science Dojo

JULY 3, 2024

Data Processing and Analysis: Techniques for data cleaning, manipulation, and analysis using libraries such as pandas and NumPy in Python. Databases and SQL: Managing and querying relational databases using SQL, as well as working with NoSQL databases like MongoDB.

The MLOps Blog

MARCH 15, 2023

In this post, you will learn about the 10 best data pipeline tools, their pros, cons, and pricing. A typical data pipeline involves the following steps or processes through which the data passes before being consumed by a downstream process, such as an ML model training process.

phData

MARCH 8, 2023

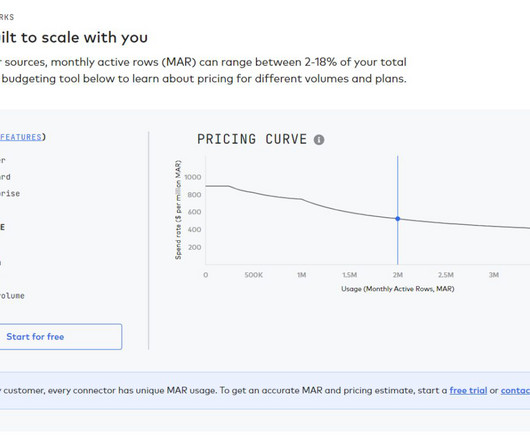

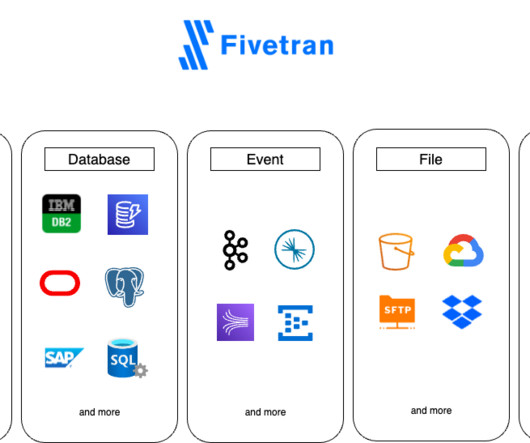

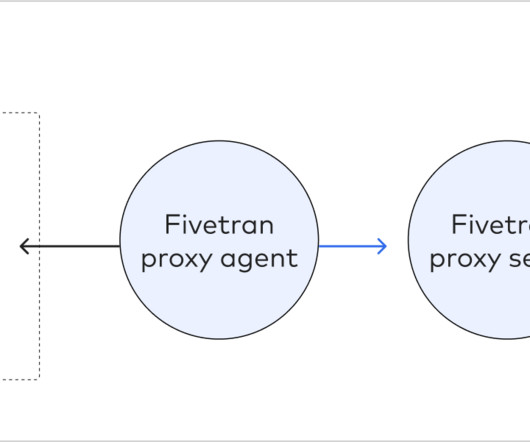

It allows organizations to easily connect their disparate data sources without having to manage any infrastructure. Fivetran’s automated data movement platform simplifies the ETL (extract, transform, load) process by automating most of the time-consuming tasks of ETL that data engineers would typically do.

Pickl AI

JULY 25, 2023

Data engineers are essential professionals responsible for designing, constructing, and maintaining an organization’s data infrastructure. They create data pipelines, ETL processes, and databases to facilitate smooth data flow and storage. Data Visualization: Matplotlib, Seaborn, Tableau, etc.

phData

SEPTEMBER 2, 2024

Best practices are a pivotal part of any software development, and data engineering is no exception. This ensures the data pipelines we create are robust, durable, and secure, providing the desired data to the organization effectively and consistently. What Are Matillion Jobs and Why Do They Matter?

phData

AUGUST 8, 2024

Putting the T for Transformation in ELT (ETL) is essential to any data pipeline. After extracting and loading your data into the Snowflake AI Data Cloud, you may wonder how best to transform it. What are Snowflake’s Best Features for Data Transformation?

Women in Big Data

NOVEMBER 27, 2024

Evaluate integration capabilities with existing data sources and Extract, Transform, and Load (ETL) tools. Its PostgreSQL foundation ensures compatibility with most SQL clients. Strengths: Real-time analytics, built-in machine learning capabilities, and fast querying with standard SQL.

The MLOps Blog

SEPTEMBER 7, 2023

Data Scientists and ML Engineers typically write lots and lots of code: code for exploratory analysis, experimentation code for modeling, ETLs for creating training datasets, Airflow (or similar) code to generate DAGs, REST APIs, streaming jobs, monitoring jobs, etc. Related post: MLOps Is an Extension of DevOps.

phData

JULY 17, 2023

In order to fully leverage this vast quantity of collected data, companies need a robust and scalable data infrastructure to manage it. This is where Fivetran and the Modern Data Stack come in. This complexity often requires many hours of work from a large data engineering team to build and manually manage data pipelines.

Pickl AI

NOVEMBER 4, 2024

Effective data governance enhances quality and security throughout the data lifecycle. What is Data Engineering? Data Engineering is designing, constructing, and managing systems that enable data collection, storage, and analysis. ETL is vital for ensuring data quality and integrity.

phData

APRIL 29, 2024

Understanding Fivetran: Fivetran is a popular Software-as-a-Service platform that enables users to automate the movement of data and ETL processes across diverse sources to a target destination. For a longer overview, along with insights and best practices, please feel free to jump back to the previous blog.

Pickl AI

JULY 3, 2023

Here are steps you can follow to pursue a career as a BI Developer: Acquire a solid foundation in data and analytics: Start by building a strong understanding of data concepts, relational databases, SQL (Structured Query Language), and data modeling.

ODSC - Open Data Science

JANUARY 18, 2024

This individual is responsible for building and maintaining the infrastructure that stores and processes data; the kinds of data can be diverse, but most commonly it will be structured and unstructured data. They’ll also work with software engineers to ensure that the data infrastructure is scalable and reliable.

phData

AUGUST 10, 2023

That said, dbt provides the ability to generate data vault models and also allows you to write your data transformations using SQL and code-reusable macros powered by Jinja2 to run your data pipelines in a clean and efficient way. The most important reason for using DBT in Data Vault 2.0

phData

OCTOBER 20, 2023

Some of the databases supported by Fivetran are: Snowflake Data Cloud (BETA), MySQL, PostgreSQL, SAP ERP, SQL Server, and Oracle. In this blog, we will review how to pull data from on-premise systems using Fivetran to a specific target or destination. Databases often write more information to a transaction log than is required.

Alation

JANUARY 17, 2023

It is known to have benefits in handling data due to its robustness, speed, and scalability. A typical modern data stack consists of the following: A data warehouse. Data ingestion/integration services. Reverse ETL tools. Data orchestration tools. A Note on the Shift from ETL to ELT.

Alation

JUNE 14, 2023

“You don’t have to write ETL jobs.” That lowers the barrier to entry because you don’t have to be an ETL developer. Data Pipeline Capabilities: This team’s scope is massive because the data pipelines are huge and there are many different capabilities embedded in them. Invest in automation.

phData

MARCH 1, 2024

There’s no need for developers or analysts to manually adjust table schemas or modify ETL (Extract, Transform, Load) processes whenever the source data structure changes. Time Efficiency – The automated schema detection and evolution features contribute to faster data availability.
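To illustrate what automated schema evolution means in practice, here is a small sketch that adds missing columns before inserting a record. It uses SQLite as a stand-in and is not the platform feature the article describes; `evolve_and_insert` is a hypothetical helper, and the table and column names are assumed to come from a trusted source because they are interpolated into SQL.

```python
import sqlite3


def evolve_and_insert(conn, table, record):
    """Add any columns missing from `table`, then insert `record` (a dict)."""
    # PRAGMA table_info returns one row per column; index 1 is the column name.
    existing = {row[1] for row in conn.execute(f"PRAGMA table_info({table})")}
    for column in record:
        if column not in existing:
            # Schema evolution: widen the table instead of failing the load.
            conn.execute(f'ALTER TABLE {table} ADD COLUMN "{column}"')
    cols = ", ".join(f'"{c}"' for c in record)
    marks = ", ".join("?" for _ in record)
    conn.execute(
        f"INSERT INTO {table} ({cols}) VALUES ({marks})", list(record.values())
    )
```

Rows loaded before a column existed simply read back as NULL for that column, so earlier data stays valid after the schema widens.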

phData

FEBRUARY 7, 2024

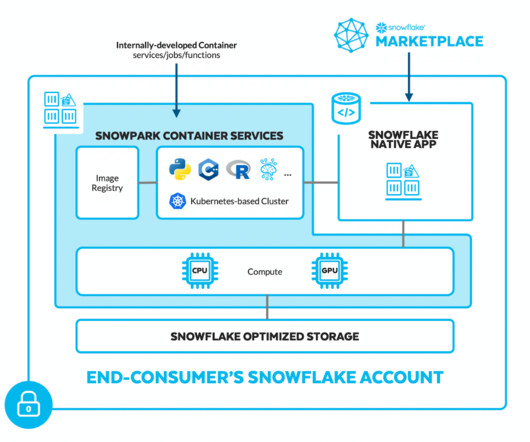

Snowpark is the set of libraries and runtimes in Snowflake that securely deploy and process non-SQL code, including Python, Java, and Scala. A DataFrame is like a query that must be evaluated to retrieve data. An action causes the DataFrame to be evaluated and sends the corresponding SQL statement to the server for execution.
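The lazy-evaluation behavior described above can be imitated with a toy class: transformations only build up a query description, and an action sends the SQL for execution. This is deliberately not the Snowpark API. `LazyFrame` is a hypothetical stand-in, SQLite plays the role of the server, and predicates are raw SQL strings that must come from trusted code.

```python
import sqlite3


class LazyFrame:
    """Toy stand-in for a lazily evaluated DataFrame; not the Snowpark API."""

    def __init__(self, conn, table, predicates=(), columns=("*",)):
        self._conn = conn
        self._table = table
        self._predicates = tuple(predicates)
        self._columns = tuple(columns)

    def filter(self, predicate):
        # Transformation: returns a new query description, executes nothing.
        return LazyFrame(
            self._conn, self._table, self._predicates + (predicate,), self._columns
        )

    def select(self, *columns):
        # Transformation: narrows the projected columns, executes nothing.
        return LazyFrame(self._conn, self._table, self._predicates, columns)

    def to_sql(self):
        """Render the accumulated description as a single SQL statement."""
        sql = f"SELECT {', '.join(self._columns)} FROM {self._table}"
        if self._predicates:
            sql += " WHERE " + " AND ".join(self._predicates)
        return sql

    def collect(self):
        # Action: only here is the SQL sent to the database for execution.
        return self._conn.execute(self.to_sql()).fetchall()
```

Chaining `LazyFrame(conn, "orders").filter("amount > 10").select("id")` touches no data until `collect()` is called, which lets the whole chain run as one statement on the server.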

phData

AUGUST 22, 2024

Consider a data pipeline that detects its own failures, diagnoses the issue, and recommends the fix, all automatically. This is the potential of self-healing pipelines, and this blog explores how to implement them using dbt, Snowflake Cortex, and GitHub Actions. The error is related to an unexpected ‘)’ character.

phData

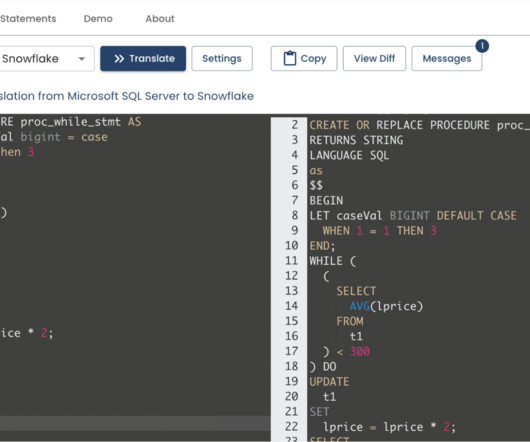

FEBRUARY 5, 2024

The tool converts the templated configuration into a set of SQL commands that are executed against the target Snowflake environment. Replicate can interact with a wide variety of databases, data warehouses, and data lakes (on-premise or based in the cloud). Essentially, it functions like Google Translate — but for SQL dialects.

DataSeries

AUGUST 15, 2024

Data Engineering Career: Unleashing The True Potential of Data. Problem-Solving Skills: Data Engineers are required to possess strong analytical and problem-solving skills to navigate complex data challenges. Understanding these fundamentals is essential for effective problem-solving in data engineering.

Alation

APRIL 4, 2023

However, the race to the cloud has also created challenges for data users everywhere, including: Cloud migration is expensive, migrating sensitive data is risky, and navigating between on-prem sources is often confusing for users. This empowers users to judge data’s quality and fitness for purpose quickly.

phData

OCTOBER 17, 2024

Apache Airflow: Airflow is an open-source ETL tool that is very useful when paired with Snowflake. dbt offers a SQL-first transformation workflow that lets teams build data transformation pipelines while following software engineering best practices like CI/CD, modularity, and documentation.

IBM Journey to AI blog

AUGUST 29, 2024

Flink jobs, designed to process continuous data streams, are key to making this possible. How Apache Flink enhances real-time event-driven businesses: Imagine a retail company that can instantly adjust its inventory based on real-time sales data pipelines. Apache Flink will work with any Kafka topic, making it consumable for all.

The MLOps Blog

JANUARY 23, 2023

Tools: Dolt, LakeFS, Delta Lake, Pachyderm. Features: Git-like versioning, database tool, data lake, data pipelines, experiment tracking, integration with cloud platforms, integrations with ML tools. Examples of data version control tools in ML: DVC (Data Version Control). DVC is a version control system for data and machine learning teams.
