Evolution in ETL: How Skipping Transformation Enhances Data Management

KDnuggets

DECEMBER 12, 2023

This article provides an overview of two new data preparation techniques that enable data democratization while minimizing transformation burdens.

Pickl AI

APRIL 9, 2025

Summary: This guide explores the top list of ETL tools, highlighting their features and use cases. It provides insights into considerations for choosing the right tool, ensuring businesses can optimize their data integration processes for better analytics and decision-making. What is ETL? What are ETL Tools?

Pickl AI

OCTOBER 17, 2024

Summary: This article explores the significance of ETL Data in Data Management. It highlights key components of the ETL process, best practices for efficiency, and future trends like AI integration and real-time processing, ensuring organisations can leverage their data effectively for strategic decision-making.

Towards AI

JANUARY 28, 2024

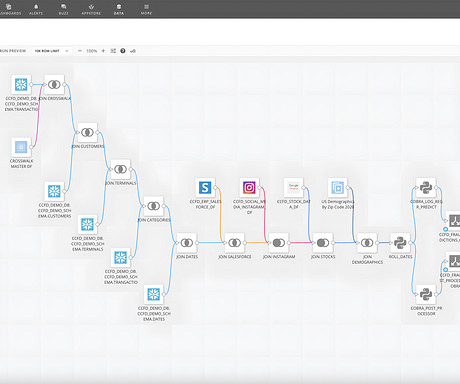

To start, get to know some key terms from the demo: Snowflake: The centralized source of truth for our initial data Magic ETL: Domo’s tool for combining and preparing data tables ERP: A supplemental data source from Salesforce Geographic: A supplemental data source (i.e.,

IBM Data Science in Practice

DECEMBER 7, 2022

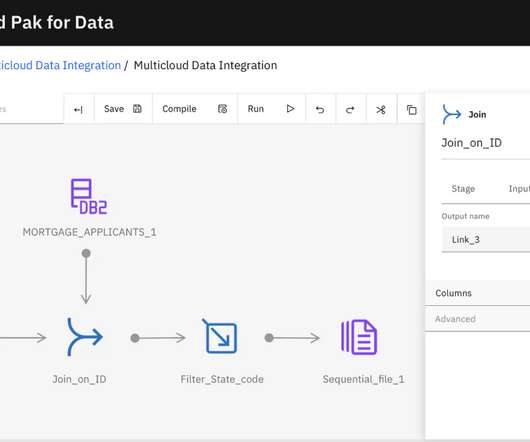

Next Generation DataStage on Cloud Pak for Data Ensuring high-quality data A crucial aspect of downstream consumption is data quality. Studies have shown that 80% of time is spent on data preparation and cleansing, leaving only 20% of time for data analytics. This leaves more time for data analysis.

DataRobot Blog

JANUARY 27, 2019

In my previous articles Predictive Model Data Prep: An Art and Science and Data Prep Essentials for Automated Machine Learning, I shared foundational data preparation tips to help you successfully. by Jen Underwood. Read More.

IBM Data Science in Practice

NOVEMBER 28, 2022

Ensuring high-quality data A crucial aspect of downstream consumption is data quality. Studies have shown that 80% of time is spent on data preparation and cleansing, leaving only 20% of time for data analytics. This leaves more time for data analysis. Let’s use address data as an example.

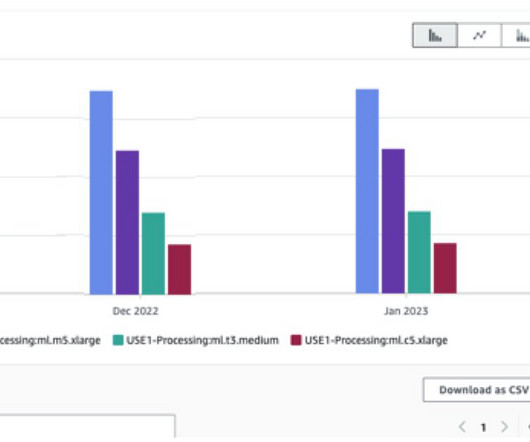

AWS Machine Learning Blog

MARCH 1, 2023

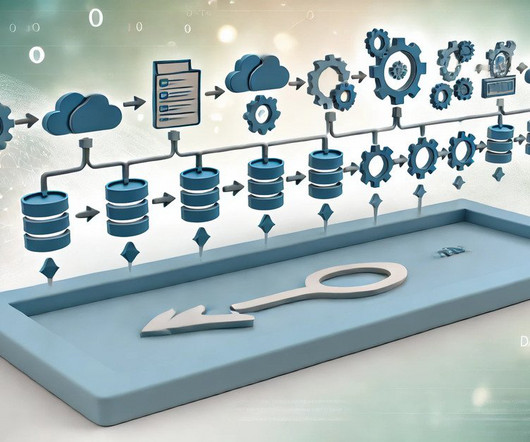

Continuous ML model retraining is one method to overcome this challenge by relearning from the most recent data. This requires not only well-designed features and ML architecture, but also data preparation and ML pipelines that can automate the retraining process. But there is still an engineering challenge.

IBM Journey to AI blog

MARCH 14, 2024

Db2 Warehouse fully supports open formats such as Parquet, Avro, ORC and Iceberg table format to share data and extract new insights across teams without duplication or additional extract, transform, load (ETL). This allows you to scale all analytics and AI workloads across the enterprise with trusted data.

ODSC - Open Data Science

JUNE 12, 2023

Then we have some other ETL processes to constantly land the past 5 years of data into the Datamarts. No-code/low-code experience using a diagram view in the data preparation layer similar to Dataflows.

IBM Data Science in Practice

APRIL 9, 2024

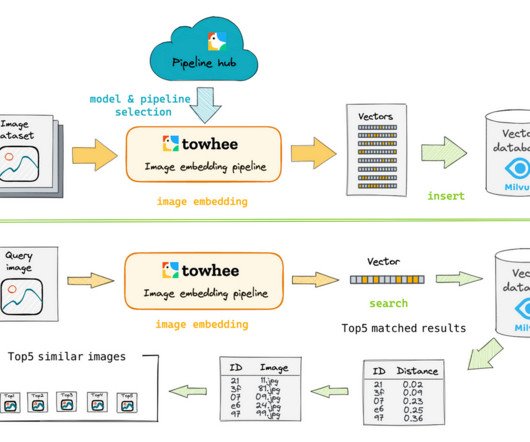

Data Preparation Here we use a subset of the ImageNet dataset (100 classes). You can follow the command below to download the data. Towhee is a framework that provides ETL for unstructured data using SoTA machine learning models. It allows you to create data processing pipelines.

Dataconomy

JULY 28, 2023

These tools offer a wide range of functionalities to handle complex data preparation tasks efficiently. The tool also employs AI capabilities for automatically providing attribute names and short descriptions for reports, making it easy to use and efficient for data preparation.

Alation

FEBRUARY 20, 2020

Business Intelligence used to require months of effort from BI and ETL teams. More recently, we’ve seen Extract, Transform and Load (ETL) tools like Informatica and IBM DataStage disrupted by self-service data preparation tools. You used to be able to get those standards from your colleague in the BI/ETL team.

Pickl AI

FEBRUARY 4, 2024

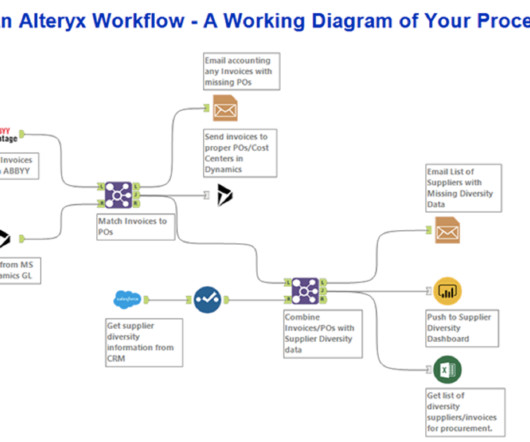

The platform employs an intuitive visual language, Alteryx Designer, streamlining data preparation and analysis. With Alteryx Designer, users can effortlessly input, manipulate, and output data without delving into intricate coding, or with minimal code at most. Is Alteryx an ETL tool? What is Alteryx Designer?

AWS Machine Learning Blog

AUGUST 21, 2023

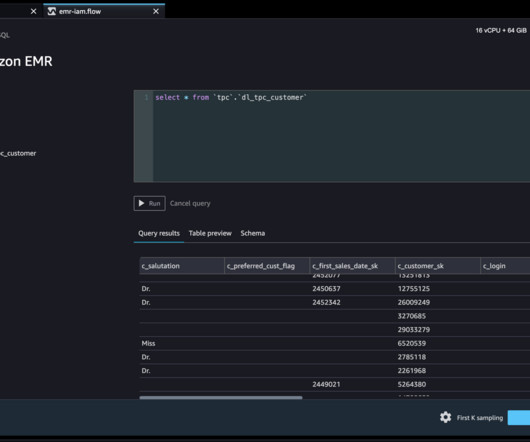

Amazon SageMaker Data Wrangler reduces the time it takes to collect and prepare data for machine learning (ML) from weeks to minutes. We are happy to announce that SageMaker Data Wrangler now supports using Lake Formation with Amazon EMR to provide this fine-grained data access restriction.

DECEMBER 11, 2024

With SageMaker Unified Studio notebooks, you can use Python or Spark to interactively explore and visualize data, prepare data for analytics and ML, and train ML models. With the SQL editor, you can query data lakes, databases, data warehouses, and federated data sources. Big Data Architect.

AWS Machine Learning Blog

JUNE 18, 2024

LLMs excel at writing code and reasoning over text, but tend to not perform as well when interacting directly with time-series data. The output data is transformed to a standardized format and stored in a single location in Amazon S3 in Parquet format, a columnar and efficient storage format.

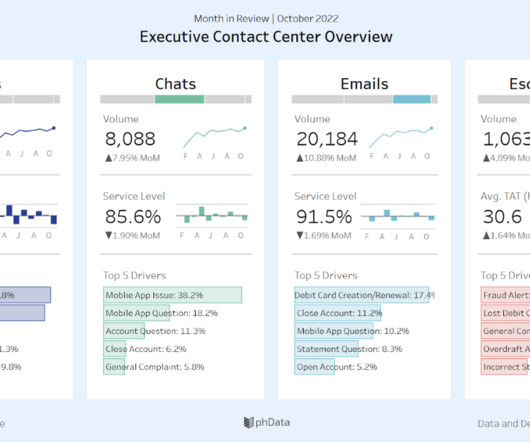

phData

JUNE 26, 2023

While both these tools are powerful on their own, their combined strength offers a comprehensive solution for data analytics. In this blog post, we will show you how to leverage KNIME’s Tableau Integration Extension and discuss the benefits of using KNIME for data preparation before visualization in Tableau.

How to Learn Machine Learning

APRIL 26, 2025

This includes duplicate removal, missing value treatment, variable transformation, and normalization of data. Tools like Python (with pandas and NumPy), R, and ETL platforms like Apache NiFi or Talend are used for data preparation before analysis.
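The preparation steps named in this excerpt can be sketched in a few lines. The example below is a minimal, dependency-free illustration and is not taken from the article; the `prepare` function, the `id` and `value` field names, and the choice of mean imputation and min-max scaling are all assumptions made for the sketch.

```python
from statistics import mean


def prepare(rows):
    """Deduplicate, impute missing values, and min-max normalize 'value'.

    Hypothetical helper: assumes each row is a dict with 'id' and 'value'
    keys and that at least one 'value' is present.
    """
    # 1. Duplicate removal: keep the first occurrence of each (id, value) pair.
    seen = set()
    unique = []
    for row in rows:
        key = (row["id"], row["value"])
        if key not in seen:
            seen.add(key)
            unique.append(dict(row))  # copy so the input is not mutated

    # 2. Missing value treatment: replace None with the mean of observed values.
    observed = [r["value"] for r in unique if r["value"] is not None]
    fill = mean(observed)
    for r in unique:
        if r["value"] is None:
            r["value"] = fill

    # 3. Normalization: rescale values to the range [0, 1] (min-max scaling).
    lo = min(r["value"] for r in unique)
    hi = max(r["value"] for r in unique)
    span = (hi - lo) or 1.0  # avoid division by zero when all values are equal
    for r in unique:
        r["value"] = (r["value"] - lo) / span
    return unique


rows = [
    {"id": 1, "value": 10.0},
    {"id": 1, "value": 10.0},  # exact duplicate
    {"id": 2, "value": None},  # missing value
    {"id": 3, "value": 30.0},
]
cleaned = prepare(rows)
```

With pandas, the same steps would typically rely on `DataFrame.drop_duplicates` and `DataFrame.fillna`; the plain-Python version is used here only to keep the sketch self-contained.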

AWS Machine Learning Blog

JANUARY 6, 2023

TR used AWS Glue DataBrew and AWS Batch jobs to perform the extract, transform, and load (ETL) jobs in the ML pipelines, and SageMaker along with Amazon Personalize to tailor the recommendations. TR wanted to take advantage of AWS managed services where possible to simplify operations and reduce undifferentiated heavy lifting.

phData

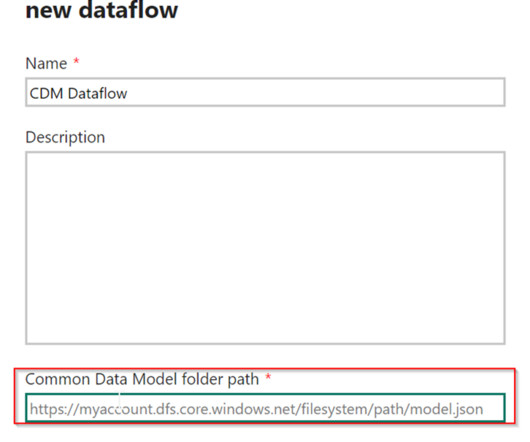

SEPTEMBER 28, 2023

Dataflows represent a cloud-based technology designed for data preparation and transformation purposes. Dataflows have different connectors to retrieve data, including databases, Excel files, APIs, and other similar sources, along with data manipulations that are performed using Online Power Query Editor.

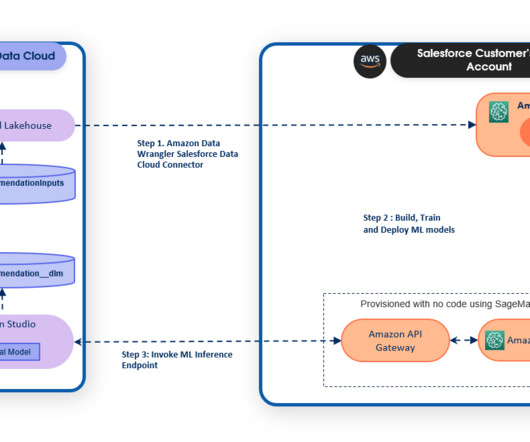

AWS Machine Learning Blog

AUGUST 4, 2023

Benefits of the SageMaker and Data Cloud Einstein Studio integration Here’s how using SageMaker with Einstein Studio in Salesforce Data Cloud can help businesses: It provides the ability to connect custom and generative AI models to Einstein Studio for various use cases, such as lead conversion, case classification, and sentiment analysis.

phData

SEPTEMBER 25, 2024

With the importance of data in various applications, there’s a need for effective solutions to organize, manage, and transfer data between systems with minimal complexity. While numerous ETL tools are available on the market, selecting the right one can be challenging.

Alation

FEBRUARY 20, 2020

Data lakes, while useful in helping you to capture all of your data, are only the first step in extracting the value of that data. We recently announced an integration with Trifacta to seamlessly integrate the Alation Data Catalog with self-service data prep applications to help you solve this issue.

phData

OCTOBER 11, 2023

In this blog, we will focus on integrating Power BI within KNIME for enhanced data analytics. KNIME and Power BI: The Power of Integration The data analytics process invariably involves a crucial phase: data preparation. This phase demands meticulous customization to optimize data for analysis.

phData

FEBRUARY 9, 2023

Before we dive in, it’s important to note that there are multiple ways to migrate data from Redshift tables to Snowflake. One popular route is leveraging third-party ETL tools like Fivetran to ensure a smooth and successful migration. For this blog, we’ll look at how to do this by using the Redshift unload command, Snowpipe, and Spark.

AWS Machine Learning Blog

SEPTEMBER 18, 2024

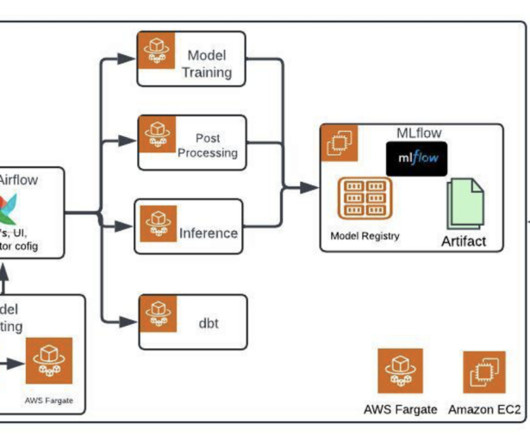

An example directed acyclic graph (DAG) might automate data ingestion, processing, model training, and deployment tasks, ensuring that each step is run in the correct order and at the right time. Though it’s worth mentioning that Airflow isn’t used at runtime as is usual for extract, transform, and load (ETL) tasks.
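How a DAG guarantees execution order can be illustrated with Python's standard-library `graphlib` module (Python 3.9+). This is a rough sketch of the ordering idea only, not Airflow's API; the task names and the `run_order` helper are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
pipeline = {
    "ingest": set(),
    "process": {"ingest"},
    "train": {"process"},
    "deploy": {"train"},
}


def run_order(dag):
    """Return the tasks in an order that respects every dependency."""
    return list(TopologicalSorter(dag).static_order())


order = run_order(pipeline)
print(order)
```

A scheduler such as Airflow adds timing, retries, and monitoring on top of this ordering, and `TopologicalSorter` raises `graphlib.CycleError` if the dependencies contain a cycle, which is why the graph has to be acyclic.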

Alation

FEBRUARY 20, 2020

However, most are only deployed over one data store (Hadoop or other various backends). In 2016, these will increasingly be deployed to query multiple data sources. The implication will be doing away with some (if not all) of the ETL work required to gather all of the data in one data warehouse.

AWS Machine Learning Blog

SEPTEMBER 1, 2023

These teams are as follows: Advanced analytics team (data lake and data mesh) – Data engineers are responsible for preparing and ingesting data from multiple sources, building ETL (extract, transform, and load) pipelines to curate and catalog the data, and prepare the necessary historical data for the ML use cases.

Pickl AI

OCTOBER 10, 2024

It integrates well with cloud services, databases, and big data platforms like Hadoop, making it suitable for various data environments. Typical use cases include ETL (Extract, Transform, Load) tasks, data quality enhancement, and data governance across various industries.

AWS Machine Learning Blog

NOVEMBER 29, 2023

For instance, a notebook that monitors for model data drift should have a pre-step that allows extract, transform, and load (ETL) and processing of new data and a post-step of model refresh and training in case a significant drift is noticed.

Pickl AI

NOVEMBER 4, 2024

It enables reporting and Data Analysis and provides a historical data record that can be used for decision-making. Key components of data warehousing include: ETL Processes: ETL stands for Extract, Transform, Load. ETL is vital for ensuring data quality and integrity.

IBM Journey to AI blog

JULY 17, 2023

Visual modeling: Delivers easy-to-use workflows for data scientists to build data preparation and predictive machine learning pipelines that include text analytics, visualizations and a variety of modeling methods.

AWS Machine Learning Blog

MAY 30, 2023

However, preparing raw data for ML training and evaluation is often a tedious and demanding task in terms of compute resources, time, and human effort. Data preparation commonly needs to be integrated from different sources and deal with missing or noisy values, outliers, and so on.

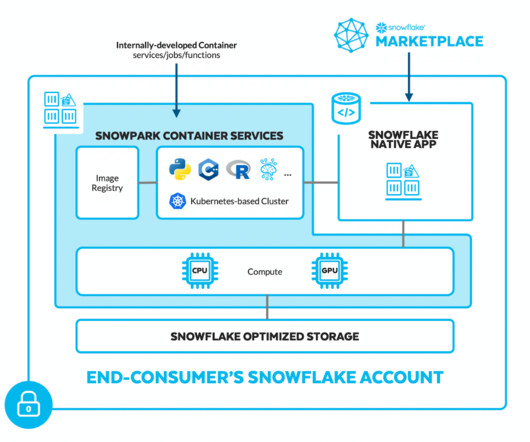

phData

FEBRUARY 7, 2024

Snowpark Use Cases Data Science Streamlining data preparation and pre-processing: Snowpark’s Python, Java, and Scala libraries allow data scientists to use familiar tools for wrangling and cleaning data directly within Snowflake, eliminating the need for separate ETL pipelines and reducing context switching.

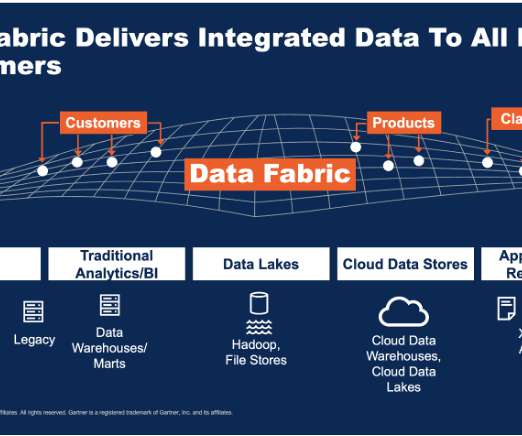

Pickl AI

DECEMBER 10, 2024

A unified data fabric also enhances data security by enabling centralised governance and compliance management across all platforms. Automated Data Integration and ETL Tools The rise of no-code and low-code tools is transforming data integration and Extract, Transform, and Load (ETL) processes.

Pickl AI

SEPTEMBER 10, 2024

Power Query Power Query is another transformative AI tool that simplifies data extraction, transformation, and loading (ETL). This feature allows users to connect to various data sources, clean and transform data, and load it into Excel with minimal effort.

AWS Machine Learning Blog

APRIL 16, 2024

These connections are used by AWS Glue crawlers, jobs, and development endpoints to access various types of data stores. You can use these connections for both source and target data, and even reuse the same connection across multiple crawlers or extract, transform, and load (ETL) jobs.

The MLOps Blog

OCTOBER 20, 2023

Placing functions for plotting, data loading, data preparation, and implementations of evaluation metrics in plain Python modules keeps a Jupyter notebook focused on the exploratory analysis | Source: Author Using SQL directly in Jupyter cells There are some cases in which data is not in memory (e.g.,

The MLOps Blog

MAY 31, 2023

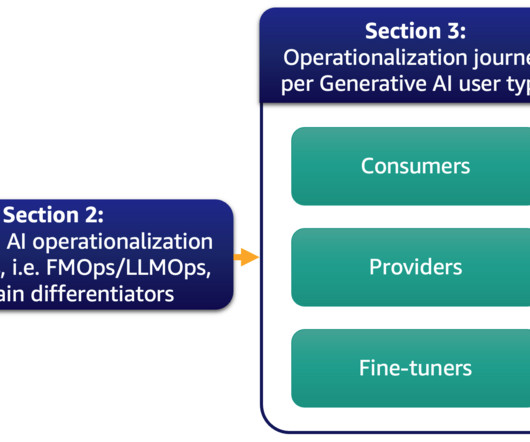

The objective of an ML Platform is to automate repetitive tasks and streamline the processes starting from data preparation to model deployment and monitoring. In this section, I will talk about best practices around building the Data Processing platform. How to set up an ML Platform in eCommerce?

MARCH 21, 2025

Traditionally, answering this question would involve multiple data exports, complex extract, transform, and load (ETL) processes, and careful data synchronization across systems. Users can write data to managed RMS tables using Iceberg APIs, Amazon Redshift, or Zero-ETL ingestion from supported data sources.

Kaggle

JULY 29, 2020

In August 2019, Data Works was acquired and Dave worked to ensure a successful transition. David: My technical background is in ETL, data extraction, data engineering and data analytics. An ETL process was built to take the CSV, find the corresponding text articles and load the data into a SQLite database.
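A CSV-to-SQLite ETL process of the kind described here might look roughly like the sketch below. It is not the interviewee's actual code: the column names, the `articles` table schema, the in-memory sample data, and the `run_etl` function are all assumptions made for illustration.

```python
import csv
import io
import sqlite3

# Hypothetical inputs: a CSV index of articles and a lookup of article texts.
CSV_DATA = "article_id,title\na1,First paper\na2,Second paper\na3,Missing text\n"
ARTICLE_TEXTS = {
    "a1": "Body of the first paper.",
    "a2": "Body of the second paper.",
}


def run_etl(csv_text, texts, conn):
    """Extract CSV rows, attach the matching article text, load into SQLite."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS articles "
        "(article_id TEXT PRIMARY KEY, title TEXT, body TEXT)"
    )
    rows = []
    # Extract: read each CSV record as a dict keyed by the header row.
    for record in csv.DictReader(io.StringIO(csv_text)):
        # Transform: find the corresponding article text, skipping misses.
        body = texts.get(record["article_id"])
        if body is None:
            continue
        rows.append((record["article_id"], record["title"].strip(), body))
    # Load: insert all rows in one transaction.
    with conn:
        conn.executemany("INSERT OR REPLACE INTO articles VALUES (?, ?, ?)", rows)
    return len(rows)


conn = sqlite3.connect(":memory:")
loaded = run_etl(CSV_DATA, ARTICLE_TEXTS, conn)
```

Using `INSERT OR REPLACE` with a primary key keeps the load step idempotent, so re-running the process on the same CSV does not create duplicate rows.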

IBM Journey to AI blog

OCTOBER 30, 2024

IBM watsonx.data facilitates scalable analytics and AI endeavors by accommodating data from diverse sources, eliminating the need for migration or cataloging through open formats. This approach enables centralized access and sharing while minimizing extract, transform and load (ETL) processes and data duplication.

AWS Machine Learning Blog

FEBRUARY 18, 2025

To handle the log data efficiently, raw logs were centralized into an Amazon Simple Storage Service (Amazon S3) bucket. An Amazon EventBridge schedule checked this bucket hourly for new files and triggered log transformation extract, transform, and load (ETL) pipelines built using AWS Glue and Apache Spark.
