How to Delete Duplicate Rows in SQL?

Analytics Vidhya

AUGUST 28, 2024

Whether you’re cleaning up customer lists, transaction logs, or other datasets, removing duplicate rows is vital for maintaining data quality. The post How to Delete Duplicate Rows in SQL? appeared first on Analytics Vidhya.
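
One common way to remove duplicate rows is to keep a single row per group of identical values and delete the rest. The sketch below runs that idea against an in-memory SQLite database from Python. The `customers` table, its columns, and the sample rows are assumptions made up for illustration, and the linked article may use a different technique.

```python
import sqlite3

# Hypothetical table and column names, chosen only for illustration.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, email TEXT)"
)
conn.executemany(
    "INSERT INTO customers (name, email) VALUES (?, ?)",
    [
        ("Ada", "ada@example.com"),
        ("Ada", "ada@example.com"),      # duplicate of the first row
        ("Grace", "grace@example.com"),
        ("Ada", "ada@example.com"),      # another duplicate
    ],
)

# Keep the row with the smallest id in each (name, email) group
# and delete every other row in that group.
conn.execute(
    """
    DELETE FROM customers
    WHERE id NOT IN (
        SELECT MIN(id) FROM customers GROUP BY name, email
    )
    """
)
conn.commit()

remaining = conn.execute(
    "SELECT id, name, email FROM customers ORDER BY id"
).fetchall()
print(remaining)
```

Which row survives is a design choice: `MIN(id)` keeps the earliest inserted copy, while `MAX(id)` would keep the latest.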

Machine Learning Research at Apple

MARCH 23, 2025

We identify two largely unaddressed limitations in current open benchmarks: (1) data quality issues in the evaluation data mainly attributed to the lack of capturing the probabilistic nature of translating a natural language description into a structured query (e.g.,

IBM Data Science in Practice

JANUARY 2, 2025

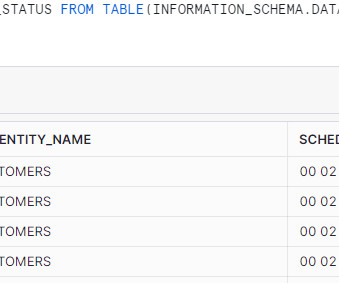

By creating microsegments, businesses can be alerted to surprises, such as sudden deviations or emerging trends, empowering them to respond proactively and make data-driven decisions. SQL Asset Creation: For each selected value, the system dynamically generates a separate SQL asset. For this example, choose MaritalStatus.

IBM Data Science in Practice

APRIL 26, 2024

In this blog, we explore how the introduction of SQL Asset Type enhances the metadata enrichment process within the IBM Knowledge Catalog, improving data governance and consumption. Introducing SQL Asset Type: A significant enhancement to the metadata enrichment process is the introduction of SQL Asset Type.

Alation

MAY 24, 2022

generally available on May 24, Alation introduces the Open Data Quality Initiative for the modern data stack, giving customers the freedom to choose the data quality vendor that’s best for them with the added confidence that those tools will integrate seamlessly with Alation’s Data Catalog and Data Governance application.

IBM Journey to AI blog

JANUARY 5, 2023

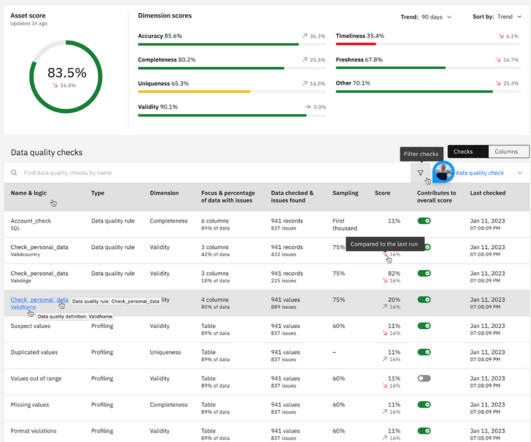

Poor data quality is one of the top barriers faced by organizations aspiring to be more data-driven. Ill-timed business decisions and misinformed business processes, missed revenue opportunities, failed business initiatives and complex data systems can all stem from data quality issues.

DagsHub

AUGUST 23, 2024

As such, the quality of their data can make or break the success of the company. This article will guide you through the concept of a data quality framework, its essential components, and how to implement it effectively within your organization. What is a data quality framework?

Alation

MAY 24, 2022

In a sea of questionable data, how do you know what to trust? Data quality tells you the answer. It signals what data is trustworthy, reliable, and safe to use. It empowers engineers to oversee data pipelines that deliver trusted data to the wider organization. Today, as part of its 2022.2

Pickl AI

APRIL 21, 2025

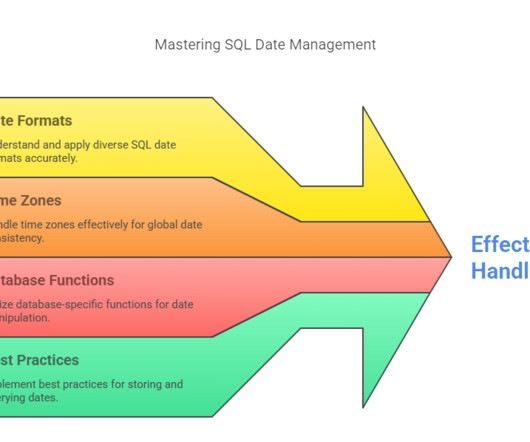

Summary: Mastering SQL date formats is crucial for accurate data storage and retrieval. However, working with dates in SQL can sometimes be tricky due to varying formats, time zones, and database-specific functions. Use native SQL date/time data types instead of strings for better performance.
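
Here is a small sketch of why storing dates as strings is risky. It illustrates the correctness side of the advice, not the performance claim, and uses plain Python instead of a specific database. The sample values and the day/month/year format are assumptions for illustration.

```python
from datetime import datetime

# Hypothetical order dates stored as text in day/month/year form.
raw = ["03/02/2024", "15/01/2024", "01/12/2023"]

# Sorting the strings compares characters, not calendar order.
sorted_as_text = sorted(raw)

# Parsing into real date values restores chronological order.
parsed = [datetime.strptime(s, "%d/%m/%Y").date() for s in raw]
sorted_as_dates = [d.isoformat() for d in sorted(parsed)]

print(sorted_as_text)
print(sorted_as_dates)
```

The text sort places 3 February 2024 before 15 January 2024, while the parsed dates sort chronologically. A string column in a database is generally ordered and compared the same character-by-character way.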

phData

OCTOBER 25, 2024

“Quality over Quantity” is a phrase we hear regularly in life, but when it comes to the world of data, we often fail to adhere to this rule. Data Quality Monitoring implements quality checks in operational data processes to ensure that the data meets pre-defined standards and business rules.
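
As a rough sketch of what checking data against pre-defined standards and business rules can look like, the example below evaluates a few records against named rules and reports which rows fail. The records, rule names, and the `run_checks` helper are all hypothetical and do not represent any particular monitoring product.

```python
# Hypothetical records and rules, for illustration only.
records = [
    {"order_id": 1, "quantity": 2, "country": "US"},
    {"order_id": 2, "quantity": -1, "country": "US"},
    {"order_id": 3, "quantity": 5, "country": None},
]

rules = {
    "quantity_is_positive": lambda r: r["quantity"] is not None and r["quantity"] > 0,
    "country_is_present": lambda r: r["country"] is not None,
}


def run_checks(rows, checks):
    """Return a mapping of rule name to the order_id of each failing row."""
    return {
        name: [r["order_id"] for r in rows if not check(r)]
        for name, check in checks.items()
    }


failures = run_checks(records, rules)
print(failures)
```

In an operational pipeline, a check like this would typically run on each batch, with non-empty failure lists triggering an alert or blocking the load.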

Data Science Dojo

JULY 6, 2023

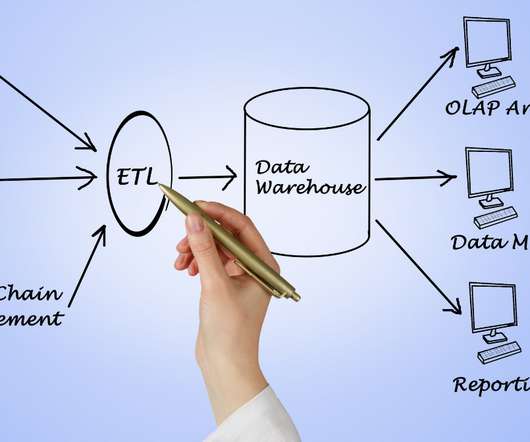

These tools provide data engineers with the necessary capabilities to efficiently extract, transform, and load (ETL) data, build data pipelines, and prepare data for analysis and consumption by other applications. Essential data engineering tools for 2023 Top 10 data engineering tools to watch out for in 2023 1.

AWS Machine Learning Blog

APRIL 3, 2025

This tool democratizes data access across the organization, enabling even nontechnical users to gain valuable insights. A standout application is the SQL-to-natural language capability, which translates complex SQL queries into plain English and vice versa, bridging the gap between technical and business teams.

Pickl AI

DECEMBER 15, 2024

Explore popular data warehousing tools and their features. Emphasise the importance of data quality and security measures. Data Warehouse Interview Questions and Answers Explore essential data warehouse interview questions and answers to enhance your preparation for 2025. Explain the Concept of a Data Mart.

AWS Machine Learning Blog

DECEMBER 11, 2024

Using the service's built-in source connectors standardizes and simplifies the work needed to maintain data quality and manage the overall data lifecycle. This will enable teams across all roles to ask detailed questions about their customer and partner accounts, territories, leads and contacts, and sales pipeline.

Data Science Dojo

OCTOBER 10, 2023

Link to event -> IMPACT 2023 Key topics covered IMPACT brings together the data community to showcase the latest and greatest trends, technologies, and processes in data quality, large-language models, data and AI governance, and of course, data observability. Link to event -> Live!

AWS Machine Learning Blog

OCTOBER 9, 2024

The service, which was launched in March 2021, predates several popular AWS offerings that have anomaly detection, such as Amazon OpenSearch, Amazon CloudWatch, AWS Glue Data Quality, Amazon Redshift ML, and Amazon QuickSight. You can review the recommendations and augment rules from over 25 included data quality rules.

Dataconomy

OCTOBER 8, 2024

Some of the challenges include discrepancies in the data, inaccurate data, corrupted data and security vulnerabilities. Adding to these headaches, it can be tricky for developers to identify the source of their inaccurate or corrupted data, which complicates efforts to maintain data quality.

Smart Data Collective

OCTOBER 20, 2020

It may seem like a considerable investment, but beefing up your defenses prevents critical data from falling into the wrong hands. Consider adding a web application firewall that prevents the injection of damaging SQL commands that will destabilize your database. More importantly, you need to cleanse your SQL server of old code.

AWS Machine Learning Blog

AUGUST 12, 2024

AWS data engineering pipeline The adaptable approach detailed in this post starts with an automated data engineering pipeline to make data stored in Splunk available to a wide range of personas, including business intelligence (BI) analysts, data scientists, and ML practitioners, through a SQL interface.

Smart Data Collective

APRIL 29, 2020

Redshift is the product for data warehousing, and Athena provides SQL data analytics. AWS Glue helps users to build data catalogues, and Quicksight provides data visualisation and dashboard construction. Dataform is a data transformation platform that is based on SQL.

The MLOps Blog

JUNE 27, 2023

Data quality control: Robust dataset labeling and annotation tools incorporate quality control mechanisms such as inter-annotator agreement analysis, review workflows, and data validation checks to ensure the accuracy and reliability of annotations. Data monitoring tools help monitor the quality of the data.

Alation

MAY 27, 2021

Rather than locking the data away from those who need it, this approach instead welcomes more users to the data — but adds guardrails to guide use. Deprecation warnings, SQL AutoSuggest, and quality flags are examples of “guardrail features.” Improve data quality by formalizing accountability for metadata.

phData

FEBRUARY 2, 2024

This evolved into the phData Toolkit, a collection of high-quality data applications to help you migrate, validate, optimize, and secure your data. Learn more about the phData Toolkit What is the Data Source Tool? This would be ideal to run regularly to track what your database looked like historically.

Data Science Dojo

JANUARY 12, 2023

Insights of data warehouse A data warehouse is a database designed for the analysis of relational data from corporate applications and transactional systems. The results of rapid SQL queries are often utilized for operational reporting and analysis; thus, the data structure and schema are set in advance to optimize for this.

Pickl AI

DECEMBER 25, 2024

Descriptive analytics is a fundamental method that summarizes past data using tools like Excel or SQL to generate reports. Techniques such as data cleansing, aggregation, and trend analysis play a critical role in ensuring data quality and relevance. Data Scientists rely on technical proficiency.

Tableau

FEBRUARY 17, 2021

You can now connect to your data in Azure SQL Database (with Azure Active Directory) and Azure Data Lake Gen 2. First, we’ve added automated data quality warnings (DQW), which are automatically created when an extract refresh or Tableau Prep flow run fails. Microsoft Azure connectivity improvements.

ODSC - Open Data Science

MARCH 13, 2023

Some of the issues make perfect sense as they relate to data quality, with common issues being bad/unclean data and data bias. What are the biggest challenges in machine learning? (select all that apply) Related to the previous question, these are a few issues faced in machine learning.

AWS Machine Learning Blog

APRIL 24, 2023

Data Wrangler has more than 300 preconfigured data transformations that can effectively be used in transforming the data. In addition, you can write custom transformations in PySpark, SQL, and pandas. Refer to Get Insights On Data and Data Quality for more information. For Target column, choose label.

Pickl AI

MARCH 3, 2025

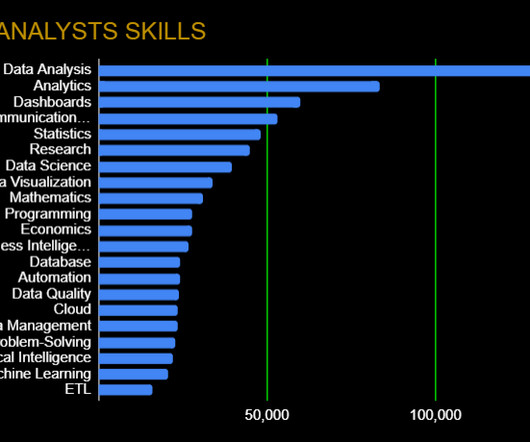

Summary: Business Intelligence Analysts transform raw data into actionable insights. They use tools and techniques to analyse data, create reports, and support strategic decisions. Key skills include SQL, data visualization, and business acumen. Introduction We are living in an era defined by data.

phData

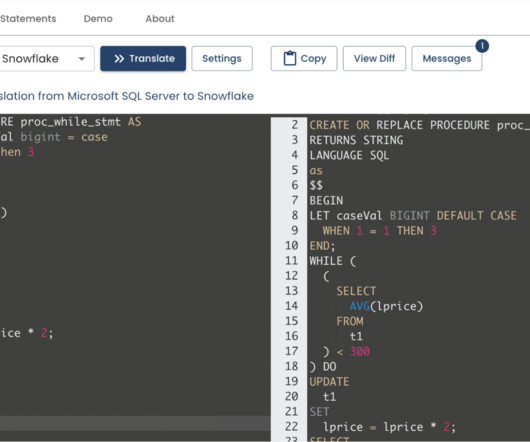

MARCH 1, 2023

The first one we want to talk about is the Toolkit SQL analyze command. When customers are looking to perform a migration, one of the first things that needs to occur is an assessment of the level of effort to migrate existing data definition language (DDL) and data manipulation language (DML).

Precisely

AUGUST 15, 2024

Address common challenges in managing SAP master data by using AI tools to automate SAP processes and ensure data quality. Create an AI-driven data and process improvement loop to continuously enhance your business operations. Think about material master data, for example. Data creation and management processes.

Pickl AI

NOVEMBER 4, 2024

Key components of data warehousing include: ETL Processes: ETL stands for Extract, Transform, Load. This process involves extracting data from multiple sources, transforming it into a consistent format, and loading it into the data warehouse. ETL is vital for ensuring data quality and integrity.
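
The three ETL steps described above can be sketched in a few lines. This is a minimal illustration only: the two CSV sources, the `sales` table, and the `extract` and `transform` helpers are hypothetical, and an in-memory SQLite database stands in for the data warehouse.

```python
import csv
import io
import sqlite3

# Extract: two hypothetical sources with inconsistent formatting.
source_a = "name,amount\n Alice ,10.50\nBob,7\n"
source_b = "name,amount\nCAROL,3.25\n"


def extract(text):
    """Read CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(row):
    """Trim whitespace, normalize name casing, convert amounts to integer cents."""
    return (row["name"].strip().title(), round(float(row["amount"]) * 100))


# Load: write the cleaned rows into the stand-in warehouse table.
warehouse = sqlite3.connect(":memory:")
warehouse.execute("CREATE TABLE sales (name TEXT, amount_cents INTEGER)")
rows = [transform(r) for src in (source_a, source_b) for r in extract(src)]
warehouse.executemany("INSERT INTO sales VALUES (?, ?)", rows)
warehouse.commit()

loaded = warehouse.execute(
    "SELECT name, amount_cents FROM sales ORDER BY name"
).fetchall()
print(loaded)
```

The transform step is where the consistency described above comes from: both sources end up with the same name casing and the same unit for amounts before anything is loaded.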

Smart Data Collective

SEPTEMBER 26, 2021

The way you store data affects ease of access and use, not to mention security. Choosing the right data storage model for your requirements is paramount. There are countless implementations to choose from, including SQL and NoSQL databases. A NoSQL database can use documents for the storage and retrieval of data.

ODSC - Open Data Science

FEBRUARY 24, 2023

Data Quality Now that you’ve learned more about your data and cleaned it up, it’s time to ensure the quality of your data is up to par. With these data exploration tools, you can determine if your data is accurate, consistent, and reliable.

Dataconomy

AUGUST 7, 2023

Beyond its performance merits, Couchbase also integrates Big Data and SQL functionalities, positioning it as a multifaceted solution for complex AI and ML tasks. This blend of features makes it an attractive option for applications requiring real-time data access and analytical capabilities.

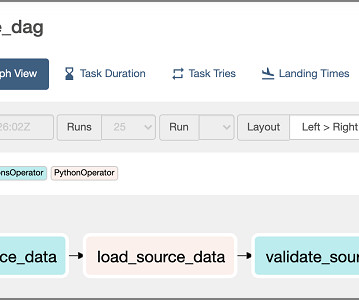

phData

AUGUST 10, 2023

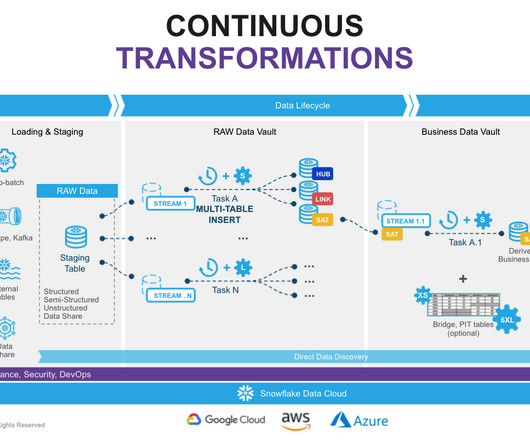

That said, dbt provides the ability to generate data vault models and also allows you to write your data transformations using SQL and code-reusable macros powered by Jinja2 to run your data pipelines in a clean and efficient way. The most important reason for using dbt in Data Vault 2.0

phData

AUGUST 22, 2024

Snowflake Cortex stood out as the ideal choice for powering the model due to its direct access to data, intuitive functionality, and exceptional performance in handling SQL tasks. I used a demo project that I frequently work with and introduced syntax errors and data quality problems.

Alation

JUNE 30, 2021

Intelligent SQL Editor. Compose, Alation’s intelligent SQL editor, offers a number of user-friendly features GigaOm highlights as useful: “Compose [is] Alation’s intelligent SQL query tool, which walks users through writing SQL queries, providing inline ML-based recommendations called SmartSuggestions.”

Snorkel AI

JUNE 2, 2023

As users integrate more sources of knowledge, the platform enables them to rapidly improve training data quality and model performance using integrated error analysis tools. This connector makes clients’ Databricks data accessible to Snorkel Flow with just a few clicks. Enter Databricks SQL connection details and credentials.

Pickl AI

APRIL 21, 2025

Real-World Example: Healthcare systems manage a huge variety of data: structured patient demographics, semi-structured lab reports, and unstructured doctor’s notes, medical images (X-rays, MRIs), and even data from wearable health monitors. Ensuring data quality and accuracy is a major challenge.

ODSC - Open Data Science

APRIL 3, 2023

Skills like effective verbal and written communication will help back up the numbers, while data visualization (specific frameworks in the next section) can help you tell a complete story. Data Wrangling: Data Quality, ETL, Databases, Big Data The modern data analyst is expected to be able to source and retrieve their own data for analysis.

phData

FEBRUARY 5, 2024

Setting up the Information Architecture Setting up an information architecture during migration to Snowflake poses challenges due to the need to align existing data structures, types, and sources with Snowflake’s multi-cluster, multi-tier architecture. Moving historical data from a legacy system to Snowflake poses several challenges.

Towards AI

AUGUST 27, 2024

Additionally, supervised data in chat format was used to align the model with human preferences on instruct-following, truthfulness, honesty, and helpfulness. The focus on data quality was paramount. A lot of time is spent on gathering and cleaning the training data for LLMs, yet the end result is often still raw/dirty.
