10 Free Machine Learning Courses from Top Universities

FEBRUARY 2, 2023

Learn the basics of machine learning, including classification, SVM, decision tree learning, neural networks, convolutional neural networks, boosting, and K nearest neighbors.

Data Science Dojo

FEBRUARY 15, 2023

Zheng’s “Guide to Data Structures and Algorithms”, Parts 1 and 2: 1) Big O Notation 2) Search 3) Sort 3-i) Quicksort 3-ii) Mergesort 4) Stack 5) Queue 6) Array 7) Hash Table 8) Graph 9) Tree (e.g.,

Data Science Dojo

MAY 27, 2024

Some common models used are as follows: Logistic Regression, which classifies by predicting the probability of a data point belonging to a class instead of a continuous value; Decision Trees, which use a tree structure to make predictions by following a series of branching decisions; Support Vector Machines (SVMs), which create a clear decision (..)

Dataconomy

MARCH 4, 2025

Classification: Classification techniques, including decision trees, categorize data into predefined classes. Decision trees and K-nearest neighbors (KNN): Both decision trees and KNN play vital roles in classification and prediction.

Data Science Dojo

FEBRUARY 14, 2024

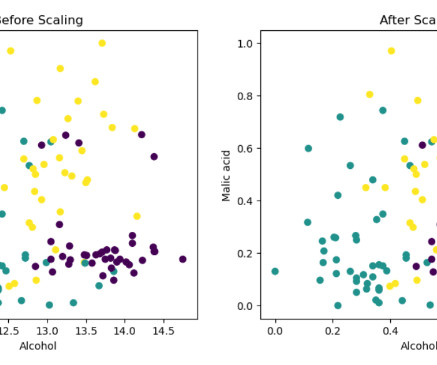

However, it can be very effective when you are working with multivariate analysis and similar methods, such as Principal Component Analysis (PCA), Support Vector Machine (SVM), K-means, Gradient Descent, Artificial Neural Networks (ANN), and K-nearest neighbors (KNN).

Pickl AI

APRIL 13, 2025

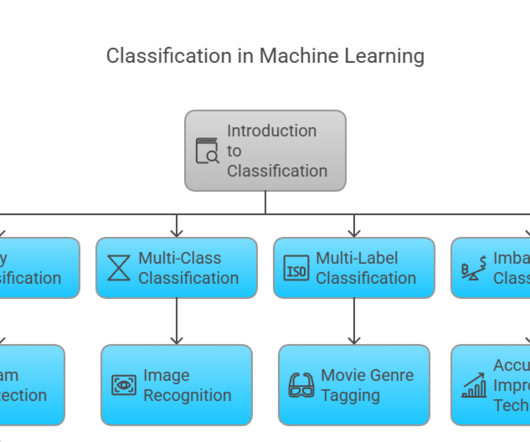

Summary: A classifier in Machine Learning categorizes data into predefined classes using algorithms like Logistic Regression and Decision Trees. It’s crucial for applications like spam detection, disease diagnosis, and customer segmentation, improving decision-making and operational efficiency across various sectors.

Towards AI

MAY 1, 2024

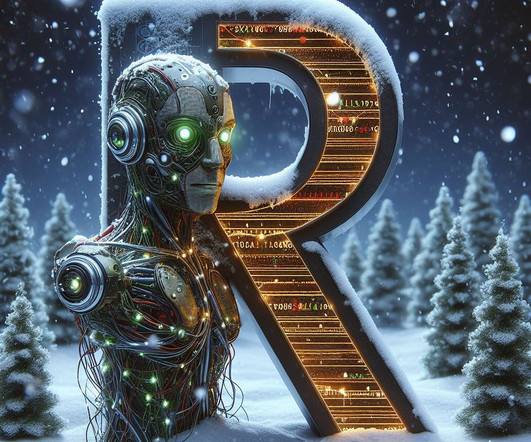

We shall look at various types of machine learning algorithms such as decision trees, random forest, K nearest neighbor, and naïve Bayes and how you can call their libraries in RStudio, including executing the code. Decision Tree and R. Types of machine learning with R.

Pickl AI

JANUARY 21, 2025

Key examples include Linear Regression for predicting prices, Logistic Regression for classification tasks, and Decision Trees for decision-making. Decision Trees visualize decision-making processes for better understanding. Algorithms like k-NN classify data based on proximity to other points.
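The proximity idea behind k-NN mentioned above can be sketched in a few lines of plain Python. This is a minimal illustration, not the excerpted article's code: the function name `knn_predict`, the toy points, and the choice of Euclidean distance with `k=3` are all assumptions made for the example.

```python
from collections import Counter
import math


def knn_predict(train_points, train_labels, query, k=3):
    """Return the majority label among the k training points closest to query."""
    # Euclidean distance from the query to every training point
    distances = [
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    ]
    # Keep the k closest neighbours and take a majority vote over their labels
    nearest = sorted(distances, key=lambda pair: pair[0])[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Toy data, made up for illustration: two well-separated clusters
points = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (5.0, 5.0), (5.2, 4.9), (4.8, 5.1)]
labels = ["a", "a", "a", "b", "b", "b"]

prediction_near_a = knn_predict(points, labels, (1.1, 1.0))
prediction_near_b = knn_predict(points, labels, (5.1, 5.0))
print(prediction_near_a, prediction_near_b)
```

There is no training step here: the "model" is just the stored points, which is why k-NN is usually described as a lazy learner.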

Towards AI

MAY 10, 2024

The three weak learner models used for this implementation were k-nearest neighbors, decision trees, and naive Bayes. For the meta-model, k-nearest neighbors was used again. A meta-model is trained on this second-level training data to produce the final predictions.
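A stacking setup of this shape can be sketched with scikit-learn's `StackingClassifier`. The excerpt does not say which library or dataset the original article used, so the Iris data, the hyperparameters, and the use of `StackingClassifier` itself are assumptions for illustration only.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import StackingClassifier
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier

# Assumed dataset: Iris, chosen only because it ships with scikit-learn
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y
)

# First level: the three weak learners named in the excerpt
base_learners = [
    ("knn", KNeighborsClassifier(n_neighbors=5)),
    ("tree", DecisionTreeClassifier(random_state=0)),
    ("nb", GaussianNB()),
]

# Second level: a k-NN meta-model trained on out-of-fold predictions
# of the base learners (the "second-level training data")
stack = StackingClassifier(
    estimators=base_learners,
    final_estimator=KNeighborsClassifier(n_neighbors=5),
    cv=5,
)
stack.fit(X_train, y_train)
stack_accuracy = stack.score(X_test, y_test)
print(f"stacked test accuracy: {stack_accuracy:.3f}")
```

Using cross-validated predictions for the second level keeps the meta-model from being trained on outputs the base learners produced for data they had already seen.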

Data Science Dojo

JULY 15, 2024

decision trees, support vector regression) that can model even more intricate relationships between features and the target variable. Decision Trees: These work by asking a series of yes/no questions based on data features to classify data points. converting text to numerical features) is crucial for model performance.

Towards AI

JULY 3, 2024

For geographical analysis, Random Forest, Support Vector Machines (SVM), and k-nearest Neighbors (k-NN) are three excellent methods. The Reasons It’s Excellent. Objective: The project’s goal is to be efficient for both regression and classification, especially in cases where the decision boundary is complicated.

Towards AI

APRIL 4, 2024

Random Forest: IBM states that Leo Breiman and Adele Cutler are the trademark holders of the widely used machine learning technique known as “random forest,” which aggregates the output of several decision trees to produce a single conclusion.

Towards AI

APRIL 7, 2024

Created by the author with DALL-E 3. Statistics, regression model, algorithm validation, Random Forest, K Nearest Neighbors and Naïve Bayes: what in God’s name do all these complicated concepts have to do with you as a simple GIS analyst? Author(s): Stephen Chege-Tierra Insights. Originally published on Towards AI.

Dataconomy

APRIL 4, 2023

Some of the common types are: Linear Regression, Deep Neural Networks, Logistic Regression, Decision Trees, AI Linear Discriminant Analysis, Naive Bayes, Support Vector Machines, Learning Vector Quantization, K-nearest Neighbors, Random Forest. What do they mean? Often, these trees adhere to an elementary if/then structure.

Towards AI

JULY 15, 2024

We shall look at various machine learning algorithms such as decision trees, random forest, K nearest neighbor, and naïve Bayes and how you can install and call their libraries in RStudio, including executing the code.

Towards AI

JANUARY 26, 2024

It can take the values: [‘linear’, ‘poly’, ‘rbf’, ‘sigmoid’, ‘precomputed’].
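The listed values match the options of the `kernel` parameter of scikit-learn's `SVC`. Assuming that is the parameter the excerpt refers to, the sketch below compares four of the kernels on the Iris dataset (an assumed dataset, picked only for convenience). The `'precomputed'` option is skipped because it expects a square kernel matrix instead of raw features.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import cross_val_score
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)

# Mean 5-fold cross-validated accuracy for each built-in kernel
kernel_scores = {}
for kernel in ["linear", "poly", "rbf", "sigmoid"]:
    scores = cross_val_score(SVC(kernel=kernel), X, y, cv=5)
    kernel_scores[kernel] = scores.mean()

for kernel, score in kernel_scores.items():
    print(f"{kernel:>8}: {score:.3f}")
```

Which kernel works best depends on the data, so a quick comparison like this is only a starting point before tuning parameters such as `C` or `gamma`.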

Pickl AI

AUGUST 28, 2024

Examples include Logistic Regression, Support Vector Machines (SVM), Decision Trees, and Artificial Neural Networks. Instead, they memorise the training data and make predictions by finding the nearest neighbour. Examples include K-Nearest Neighbors (KNN) and Case-based Reasoning.

Towards AI

APRIL 14, 2023

The prediction is then done using a k-nearest neighbor method within the embedding space. Correctly predicting the tags of the questions is a very challenging problem as it involves the prediction of a large number of labels among several hundred thousand possible labels.

Pickl AI

JULY 31, 2023

Decision Trees: Decision Trees are another example of Eager Learning algorithms that recursively split the data based on feature values during training to create a tree-like structure for prediction. Instance Similarity: Lazy Learning algorithms use a similarity measure (e.g.,

IBM Journey to AI blog

DECEMBER 20, 2023

Classification algorithms include logistic regression, k-nearest neighbors and support vector machines (SVMs), among others. Other algorithms include naïve Bayes and decision trees, the latter of which can accommodate both regression and classification tasks.

Mlearning.ai

FEBRUARY 2, 2023

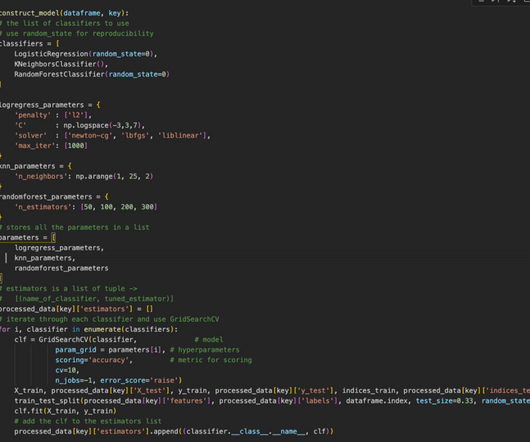

Define the classifiers: Choose a set of classifiers that you want to use, such as support vector machine (SVM), k-nearest neighbors (KNN), or decision tree, and initialize their parameters. bag of words or TF-IDF vectors) and splitting the data into training and testing sets.
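The steps named in this excerpt (TF-IDF features, a train/test split, and a set of classifiers) could look roughly like the following with scikit-learn. The toy sentences, the labels, and the hyperparameters are all made up for illustration; the original article's data and settings are not shown in the excerpt.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

# Toy corpus, invented for this sketch
texts = [
    "the team won the football match", "a late goal decided the game",
    "the striker scored twice", "the coach praised the players",
    "fans cheered in the stadium", "the league season starts soon",
    "bake the bread for thirty minutes", "add salt and pepper to the soup",
    "whisk the eggs with sugar", "simmer the sauce on low heat",
    "chop the onions and garlic", "serve the pasta with cheese",
]
labels = ["sports"] * 6 + ["cooking"] * 6

# Split the data into training and testing sets
X_train, X_test, y_train, y_test = train_test_split(
    texts, labels, test_size=0.25, random_state=0, stratify=labels
)

# Define the classifiers and initialize their parameters
classifiers = {
    "svm": SVC(kernel="linear"),
    "knn": KNeighborsClassifier(n_neighbors=3),
    "tree": DecisionTreeClassifier(random_state=0),
}

# Each pipeline converts text to TF-IDF vectors, then fits one classifier
scores = {}
for name, clf in classifiers.items():
    model = make_pipeline(TfidfVectorizer(), clf)
    model.fit(X_train, y_train)
    scores[name] = model.score(X_test, y_test)

print(scores)
```

Wrapping the vectorizer and the classifier in one pipeline ensures the TF-IDF vocabulary is learned from the training split only.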

Mlearning.ai

APRIL 6, 2023

For example, if you have binary or categorical data, you may want to consider using algorithms such as Logistic Regression, Decision Trees, or Random Forests. In contrast, for datasets with low dimensionality, simpler algorithms such as Naive Bayes or K-Nearest Neighbors may be sufficient.

Mlearning.ai

FEBRUARY 17, 2023

Simple linear regression, Multiple linear regression, Polynomial regression, Decision Tree regression, Support Vector regression, Random Forest regression. Classification is a technique to predict a category. It’s a fantastic world, trust me! You can also look at my GitHub portfolio to see the actual applications of some of them.

IBM Journey to AI blog

DECEMBER 19, 2023

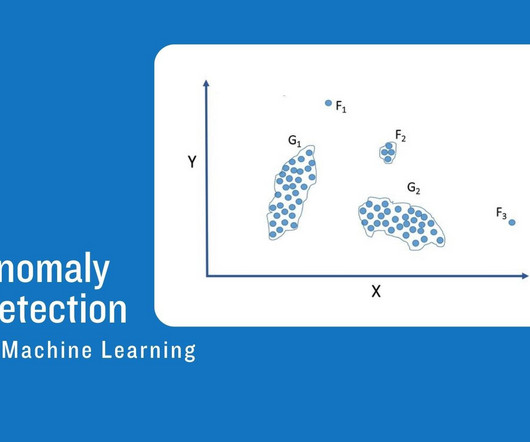

Common machine learning algorithms for supervised learning include: K-nearest neighbor (KNN) algorithm: This algorithm is a density-based classifier or regression modeling tool used for anomaly detection. Regression modeling is a statistical tool used to find the relationship between labeled data and variable data.

Pickl AI

DECEMBER 9, 2024

In contrast, decision trees assume data can be split into homogeneous groups through feature thresholds. Every Machine Learning algorithm, whether a decision tree, support vector machine, or deep neural network, inherently favours certain solutions over others.

ODSC - Open Data Science

JANUARY 5, 2024

Scikit-learn is also open-source, which makes it a popular choice for both academic and commercial use.

Mlearning.ai

NOVEMBER 29, 2023

K-Nearest Neighbor Regression Neural Network (KNN): The k-nearest neighbor (k-NN) algorithm is one of the most popular non-parametric approaches used for classification, and it has been extended to regression. Decision Trees: ML-based decision trees are used to classify items (products) in the database.

Pickl AI

NOVEMBER 18, 2024

For example, linear regression is typically used to predict continuous variables, while decision trees are great for classification and regression tasks. Decision trees are easy to interpret but prone to overfitting. predicting house prices), Linear Regression, Decision Trees, or Random Forests could be good choices.

Pickl AI

SEPTEMBER 12, 2024

Decision Trees: A supervised learning algorithm that creates a tree-like model of decisions and their possible consequences, used for both classification and regression tasks. K-Means Clustering: An unsupervised learning algorithm that partitions data into K distinct clusters based on feature similarity.

Pickl AI

SEPTEMBER 3, 2023

An ensemble of decision trees is trained on both normal and anomalous data. k-Nearest Neighbors (k-NN): In the supervised approach, k-NN assigns labels to instances based on their k-nearest neighbours.

Pickl AI

JULY 26, 2023

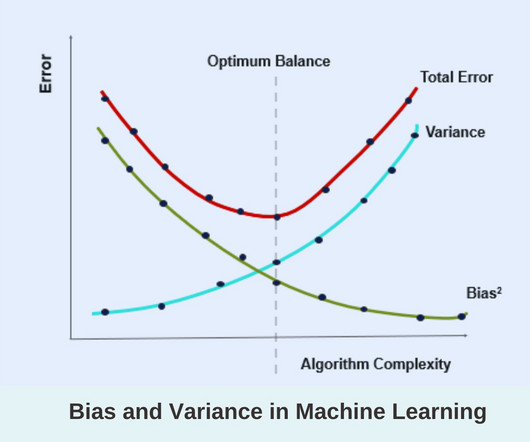

Here are some examples of variance in machine learning: Overfitting in Decision Trees: Decision trees can exhibit high variance if they are allowed to grow too deep, capturing noise and outliers in the training data.
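The effect of tree depth can be observed by comparing a shallow tree with an unrestricted one on noisy data. The sketch below uses scikit-learn with a synthetic dataset; the sample size, the 20% label noise (`flip_y=0.2`), and the depth of 3 are assumptions chosen to make the contrast visible, not values from the excerpted article.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic data with deliberately noisy labels
X, y = make_classification(
    n_samples=600, n_features=20, n_informative=5, flip_y=0.2, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

train_scores = {}
gaps = {}
for depth in (3, None):  # None lets the tree grow until its leaves are pure
    tree = DecisionTreeClassifier(max_depth=depth, random_state=0)
    tree.fit(X_train, y_train)
    train_scores[depth] = tree.score(X_train, y_train)
    # Gap between training and test accuracy: a rough sign of overfitting
    gaps[depth] = train_scores[depth] - tree.score(X_test, y_test)

for depth in (3, None):
    print(f"max_depth={depth}: train={train_scores[depth]:.3f}, gap={gaps[depth]:.3f}")
```

The unrestricted tree memorizes the training set, noise included, so its training accuracy is perfect while its test accuracy lags well behind. Limiting `max_depth` or pruning narrows that gap.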

Mlearning.ai

FEBRUARY 15, 2023

Some important things that were considered during these selections were: Random Forest: The final feature importance in a random forest is the average of the feature importances of all its decision trees. A random forest is an ensemble classifier that makes predictions using a variety of decision trees.
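The averaging described above can be checked directly against scikit-learn's `RandomForestClassifier`, whose `feature_importances_` attribute is the mean of the per-tree importances, renormalized to sum to one. The Iris dataset and the forest size are assumptions made for this sketch.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier

X, y = load_iris(return_X_y=True)
forest = RandomForestClassifier(n_estimators=50, random_state=0).fit(X, y)

# Importance vector of every individual tree in the ensemble
per_tree = np.array([tree.feature_importances_ for tree in forest.estimators_])

# Average across trees, then renormalize so the importances sum to 1
averaged = per_tree.mean(axis=0)
averaged /= averaged.sum()

matches = np.allclose(averaged, forest.feature_importances_)
print("manual average matches forest.feature_importances_:", matches)
```

The spread of `per_tree` across rows is also informative: a feature whose importance varies a lot between trees is less reliably important than the average alone suggests.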

Mlearning.ai

MAY 23, 2023

Decision trees are more prone to overfitting. Some algorithms that have low bias are Decision Trees, SVM, etc. The K-Nearest Neighbor Algorithm is a good example of an algorithm with low bias and high variance. So, this is how we draw a typical decision tree. Let us see some examples.

The MLOps Blog

DECEMBER 19, 2022

1) KNN 2) Decision Tree 3) Random Forest 4) Naive Bayes 5) Deep Learning using Cross Entropy Loss. To some extent, Logistic Regression and SVM can also be leveraged to solve a multi-class classification problem by fitting multiple binary classifiers using a one-vs-all or one-vs-one strategy. Creating the index.
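The one-vs-all and one-vs-one strategies differ mainly in how many binary classifiers they fit. A minimal sketch with scikit-learn's `OneVsRestClassifier` and `OneVsOneClassifier` follows; the synthetic four-class dataset and the choice of base estimators are assumptions for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.multiclass import OneVsOneClassifier, OneVsRestClassifier
from sklearn.svm import SVC

# Synthetic 4-class problem
X, y = make_classification(
    n_samples=400, n_features=10, n_informative=6, n_classes=4, random_state=0
)

# One-vs-all (one-vs-rest): one binary classifier per class
ovr = OneVsRestClassifier(LogisticRegression(max_iter=1000)).fit(X, y)

# One-vs-one: one binary classifier per pair of classes
ovo = OneVsOneClassifier(SVC(kernel="linear")).fit(X, y)

n_ovr = len(ovr.estimators_)  # number of classes
n_ovo = len(ovo.estimators_)  # number of class pairs, n * (n - 1) / 2
print(n_ovr, n_ovo)
```

With n classes, one-vs-all fits n models on the full data, while one-vs-one fits n(n-1)/2 models, each on only the samples of two classes.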

DagsHub

DECEMBER 23, 2024

They are: Based on shallow, simple, and interpretable machine learning models like support vector machines (SVMs), decision trees, or k-nearest neighbors (kNN). Relies on explicit decision boundaries or feature representations for sample selection.

Towards AI

JULY 19, 2023

Feel free to try other algorithms such as Random Forests, Decision Trees, Neural Networks, etc., among supervised models and k-nearest neighbors, DBSCAN, etc., among unsupervised models.

Dataconomy

SEPTEMBER 1, 2023

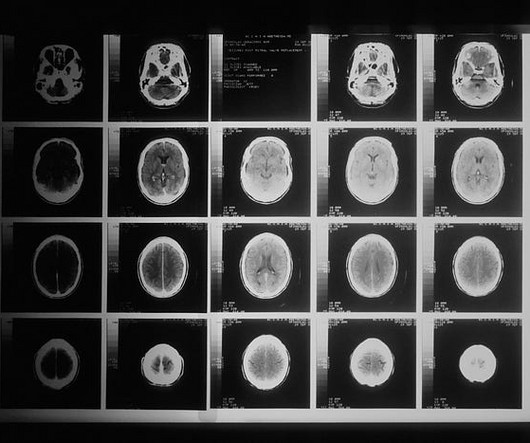

By combining, for example, a decision tree with a support vector machine (SVM), these hybrid models leverage the interpretability of decision trees and the robustness of SVMs to yield superior predictions in medicine. The decision tree algorithm used to select features is called the C4.5

Dataconomy

APRIL 25, 2025

Decision trees: Decision trees represent a simple yet powerful algorithm for multi-class classification. They function by breaking down data into subsets based on feature values, ultimately leading to class label predictions at the leaves of the tree.

Dataconomy

MARCH 28, 2025

Decision trees: They segment data into branches based on sequential questioning. Random forest: Combines multiple decision trees to strengthen predictive capabilities. K-nearest neighbors (KNN): Classifies based on proximity to other data points.

Dataconomy

DECEMBER 12, 2024

K-Nearest Neighbors (KNN): For small datasets, this can be a simple but effective way to identify file formats based on the similarity of their nearest neighbors. Overfitting can occur when the model uses too many features, causing it to make decisions faster, for example, at the endpoints of decision trees.
