Remote Data Science Jobs: 5 High-Demand Roles for Career Growth

Data Science Dojo

OCTOBER 31, 2024

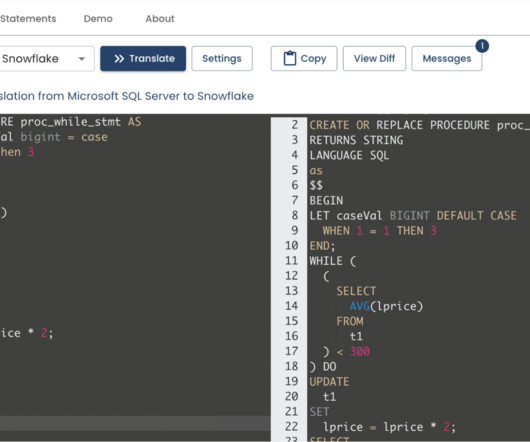

Strong analytical skills and the ability to work with large datasets are critical, as is familiarity with data modeling and ETL processes.

Programming Questions

Data science roles typically require knowledge of tools such as Python, SQL, R, or Hadoop. Prepare to discuss your experience and problem-solving abilities with these languages and tools.
