Building a Scalable ETL with SQL + Python

KDnuggets

APRIL 21, 2022

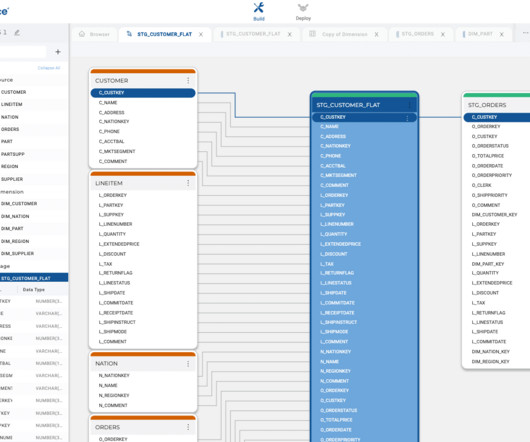

This post will look at building a modular ETL pipeline that transforms data with SQL and visualizes it with Python and R.
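As a rough illustration of what a modular pipeline with SQL-based transformation can look like, here is a minimal sketch. It is not the code from the post: the function names (`extract`, `stage`, `transform`, `run_pipeline`), the table names, and the sample rows are all hypothetical, and an in-memory SQLite database stands in for whatever database a real pipeline would use.

```python
import sqlite3


def extract():
    """Return raw rows; a stand-in for reading a file or calling an API."""
    return [
        ("2022-04-01", "books", 12.5),
        ("2022-04-01", "games", 30.0),
        ("2022-04-02", "books", 7.5),
    ]


def stage(conn, rows):
    """Write the raw rows to a staging table so SQL can work on them."""
    conn.execute("CREATE TABLE raw_sales (day TEXT, category TEXT, amount REAL)")
    conn.executemany("INSERT INTO raw_sales VALUES (?, ?, ?)", rows)


def transform(conn):
    """Do the transformation in SQL: aggregate sales per category."""
    conn.execute(
        """
        CREATE TABLE sales_by_category AS
        SELECT category, SUM(amount) AS total
        FROM raw_sales
        GROUP BY category
        """
    )


def run_pipeline():
    """Wire the steps together; each step can be swapped or tested alone."""
    conn = sqlite3.connect(":memory:")
    stage(conn, extract())
    transform(conn)
    query = "SELECT category, total FROM sales_by_category ORDER BY category"
    return conn.execute(query).fetchall()


result = run_pipeline()
print(result)
```

Keeping each step in its own function is one way to get modularity: the SQL in `transform` can change without touching extraction, and the returned rows can be handed to any plotting library for the visualization step.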

KDnuggets

APRIL 27, 2022

A Brief Introduction to Papers With Code; Machine Learning Books You Need To Read In 2022; Building a Scalable ETL with SQL + Python; 7 Steps to Mastering SQL for Data Science; Top Data Science Projects to Build Your Skills.

Data Science Dojo

OCTOBER 31, 2024

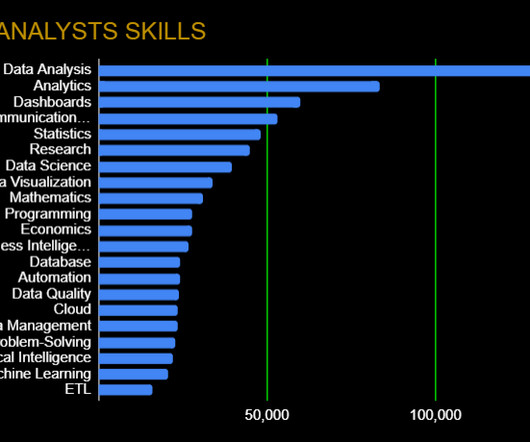

Key Skills: Proficiency in SQL is essential, along with experience in data visualization tools such as Tableau or Power BI. Strong analytical skills and the ability to work with large datasets are critical, as is familiarity with data modeling and ETL processes. Familiarity with machine learning, algorithms, and statistical modeling.

Data Science Blog

SEPTEMBER 19, 2023

This brings reliability to data ETL (Extract, Transform, Load) processes, query performance, and other critical data operations. using for loops in Python). The following Terraform script will create an Azure Resource Group, a SQL Server, and a SQL Database. So why use IaC for cloud data infrastructures?

AWS Machine Learning Blog

DECEMBER 14, 2023

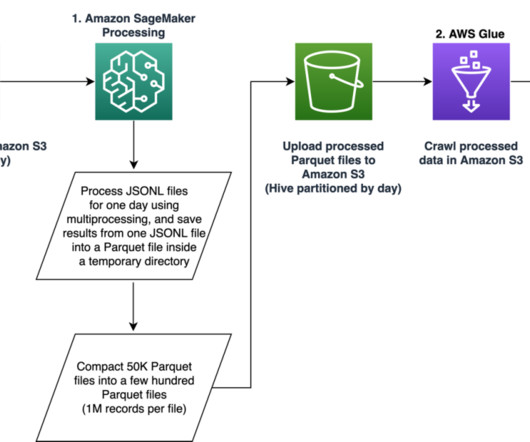

Our pipeline belongs to the general ETL (extract, transform, and load) process family that combines data from multiple sources into a large, central repository. The system includes feature engineering, deep learning model architecture design, hyperparameter optimization, and model evaluation, where all modules are run using Python.

AWS Machine Learning Blog

APRIL 16, 2024

They then use SQL to explore, analyze, visualize, and integrate data from various sources before using it in their ML training and inference. Previously, data scientists often found themselves juggling multiple tools to support SQL in their workflow, which hindered productivity.

Hacker News

NOVEMBER 19, 2024

Here are a few of the things that you might do as an AI Engineer at TigerEye: - Design, develop, and validate statistical models to explain past behavior and to predict future behavior of our customers’ sales teams - Own training, integration, deployment, versioning, and monitoring of ML components - Improve TigerEye’s existing metrics collection and (..)

ODSC - Open Data Science

APRIL 6, 2023

For budding data scientists and data analysts, there are mountains of information about why you should learn R over Python and the other way around. Though both are great to learn, what gets left out of the conversation is a simple yet powerful programming language that everyone in the data science world can agree on, SQL.

Data Science Dojo

MAY 10, 2023

Here, we outline the essential skills and qualifications that pave the way for data science careers: Proficiency in Programming Languages – Mastery of programming languages such as Python, R, and SQL forms the foundation of a data scientist’s toolkit.

Data Science Dojo

JULY 6, 2023

These tools provide data engineers with the necessary capabilities to efficiently extract, transform, and load (ETL) data, build data pipelines, and prepare data for analysis and consumption by other applications. dbt focuses on transforming raw data into analytics-ready tables using SQL-based transformations.

How to Learn Machine Learning

APRIL 26, 2025

SQL, Python scripts, and web scraping libraries such as BeautifulSoup or Scrapy are used for data collection. Tools like Python (with pandas and NumPy), R, and ETL platforms like Apache NiFi or Talend are used for data preparation before analysis.

DECEMBER 11, 2024

Data processing and SQL analytics: Analyze, prepare, and integrate data for analytics and AI using Amazon Athena, Amazon EMR, AWS Glue, and Amazon Redshift. With SageMaker Unified Studio notebooks, you can use Python or Spark to interactively explore and visualize data, prepare data for analytics and ML, and train ML models.

Pickl AI

OCTOBER 17, 2024

Summary: The ETL process, which consists of data extraction, transformation, and loading, is vital for effective data management. Introduction The ETL process is crucial in modern data management. What is ETL? ETL stands for Extract, Transform, Load.

Pickl AI

NOVEMBER 4, 2024

Coming to APIs again, discover how to use ChatGPT APIs in Python by clicking on the link. Each database type requires its specific driver, which interprets the application’s SQL queries and translates them into a format the database can understand. INSERT: Add new records to a table. UPDATE: Modify existing records in a table.
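To make the driver idea and the two statements concrete, here is a small sketch using Python's built-in `sqlite3` module as the driver. The `users` table and its values are made up for illustration; other databases need their own driver packages, but the pattern of passing SQL text plus parameters is similar.

```python
import sqlite3

# sqlite3 is the driver here: it sends SQL text to the SQLite engine.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT, city TEXT)")

# INSERT: add a new record. The ? placeholders let the driver bind values
# safely instead of building the SQL string by hand.
conn.execute("INSERT INTO users (name, city) VALUES (?, ?)", ("Ada", "London"))

# UPDATE: modify an existing record.
conn.execute("UPDATE users SET city = ? WHERE name = ?", ("Paris", "Ada"))
conn.commit()

rows = conn.execute("SELECT id, name, city FROM users").fetchall()
print(rows)
```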

Data Science Dojo

JULY 3, 2024

They cover a wide range of topics, ranging from Python, R, and statistics to machine learning and data visualization. Here’s a list of key skills that are typically covered in a good data science bootcamp: Programming Languages: Python: Widely used for its simplicity and extensive libraries for data analysis and machine learning.

NOVEMBER 24, 2023

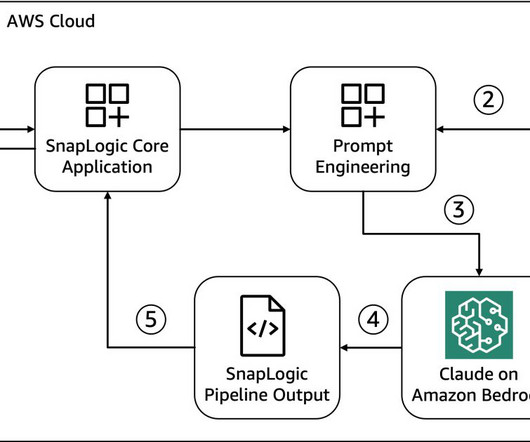

This use case highlights how large language models (LLMs) are able to become a translator between human languages (English, Spanish, Arabic, and more) and machine interpretable languages (Python, Java, Scala, SQL, and so on) along with sophisticated internal reasoning. Room for improvement!

Pickl AI

JUNE 7, 2024

Summary: Choosing the right ETL tool is crucial for seamless data integration. At the heart of this process lie ETL Tools—Extract, Transform, Load—a trio that extracts data, tweaks it, and loads it into a destination. Choosing the right ETL tool is crucial for smooth data management. What is ETL?

Pickl AI

NOVEMBER 5, 2024

It allows developers to easily connect to databases, execute SQL queries, and retrieve data. It operates as an intermediary, translating Java calls into SQL commands the database understands. ODBC uses standard SQL syntax, enabling different applications to communicate with databases regardless of the programming language.

Smart Data Collective

APRIL 29, 2020

Extract, Transform, Load (ETL). Redshift is the product for data warehousing, and Athena provides SQL data analytics. It has useful features, such as an in-browser SQL editor for queries and data analysis, various data connectors for easy data ingestion, and automated data preprocessing and ingestion. Master data management.

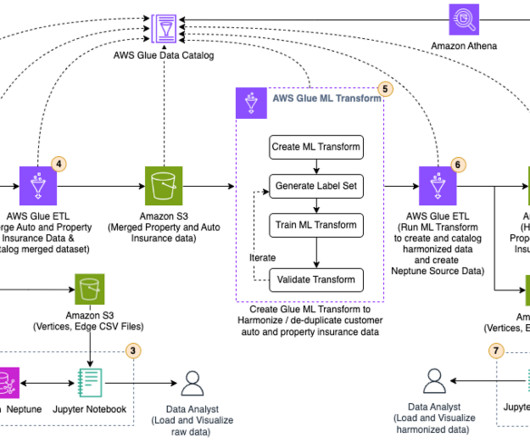

JUNE 26, 2023

Transform raw insurance data into CSV format acceptable to Neptune Bulk Loader, using an AWS Glue extract, transform, and load (ETL) job. Run an AWS Glue ETL job to merge the raw property and auto insurance data into one dataset and catalog the merged dataset. We use Python scripts to analyze the data in a Jupyter notebook.

Pickl AI

NOVEMBER 14, 2023

Looking for an effective and handy Python code repository in the form of Importing Data in Python Cheat Sheet? Your journey ends here where you will learn the essential handy tips quickly and efficiently with proper explanations which will make any type of data importing journey into the Python platform super easy.

phData

SEPTEMBER 2, 2024

In this blog, we will cover the best practices for developing jobs in Matillion, an ETL/ELT tool built specifically for cloud database platforms. Use of Python Component: The Python component, including using Jython to connect to various databases, should not be used for resource-intensive data processing.

phData

APRIL 28, 2025

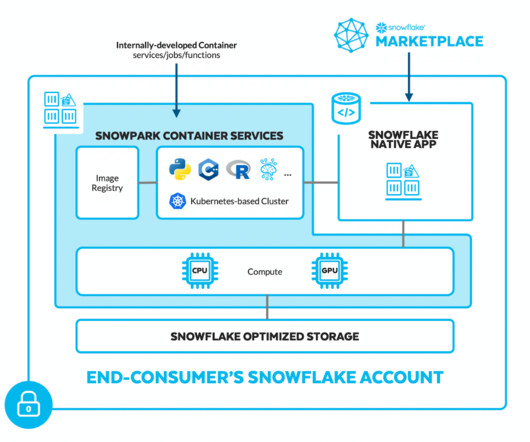

A Matillion pipeline is a collection of jobs that extract, load, and transform (ETL/ELT) data from various sources into a target system, such as a cloud data warehouse like Snowflake. Intuitive Workflow Design Workflows should be easy to follow and visually organized, much like clean, well-structured SQL or Python code.

AWS Machine Learning Blog

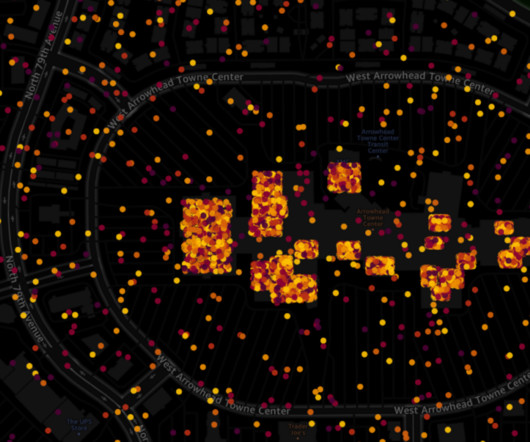

JANUARY 17, 2024

The GPU-powered interactive visualizer and Python notebooks provide a seamless way to explore millions of data points in a single window and share insights and results. As part of the initial ETL, this raw data can be loaded onto tables using AWS Glue. Sources and schema There are few sources of mobility data.

Mlearning.ai

APRIL 24, 2023

The Coursera class is direct to the point and gives concrete instructions about how to use the Azure Portal interface, Databricks, and the Python SDK; if you know nothing about Azure and need to use the service platform right away I highly recommend this course.

Pickl AI

JULY 25, 2023

They create data pipelines, ETL processes, and databases to facilitate smooth data flow and storage. With expertise in programming languages like Python, Java, SQL, and knowledge of big data technologies like Hadoop and Spark, data engineers optimize pipelines for data scientists and analysts to access valuable insights efficiently.

phData

AUGUST 8, 2024

Putting the T for Transformation in ELT (ETL) is essential to any data pipeline. They let you create virtual tables from the results of an SQL query. Stored Procedures In any data warehousing solution, stored procedures encapsulate SQL logic into repeatable routines, but Snowflake has some tricks up its sleeve.

phData

AUGUST 9, 2023

This is unlike the more traditional ETL method, where data is transformed before loading into the data warehouse. By bringing raw data into the data warehouse and then transforming it there, ELT provides more flexibility compared to ETL’s fixed pipelines. ETL systems just couldn’t handle the massive flows of raw data.
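The difference in ordering can be sketched in a few lines. This is only an illustration under simple assumptions: the sample rows, table names, and the `is_number` helper are hypothetical, and in-memory SQLite databases stand in for a real data warehouse.

```python
import sqlite3

# Hypothetical raw feed: quantities arrive as messy text.
raw = [("alice", " 10 "), ("bob", "n/a"), ("carol", "25")]


def is_number(text):
    """True when the text is a plain non-negative integer after trimming."""
    return text.strip().isdigit()


# ETL: transform in application code first, then load only the clean rows.
etl = sqlite3.connect(":memory:")
etl.execute("CREATE TABLE orders (customer TEXT, qty INTEGER)")
cleaned = [(name, int(qty.strip())) for name, qty in raw if is_number(qty)]
etl.executemany("INSERT INTO orders VALUES (?, ?)", cleaned)
etl_rows = etl.execute("SELECT customer, qty FROM orders ORDER BY customer").fetchall()

# ELT: load the raw rows untouched, then transform inside the database with SQL.
elt = sqlite3.connect(":memory:")
elt.execute("CREATE TABLE raw_orders (customer TEXT, qty TEXT)")
elt.executemany("INSERT INTO raw_orders VALUES (?, ?)", raw)
elt.execute(
    """
    CREATE TABLE orders AS
    SELECT customer, CAST(TRIM(qty) AS INTEGER) AS qty
    FROM raw_orders
    WHERE TRIM(qty) <> '' AND TRIM(qty) NOT GLOB '*[^0-9]*'
    """
)
elt_rows = elt.execute("SELECT customer, qty FROM orders ORDER BY customer").fetchall()
raw_count = elt.execute("SELECT COUNT(*) FROM raw_orders").fetchone()[0]

print(etl_rows, elt_rows, raw_count)
```

Both routes end with the same clean table, but only the ELT route still has the raw rows in the database, so a different transformation can be run later without re-extracting the data.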

Applied Data Science

AUGUST 2, 2021

The most common data science languages are Python and R — SQL is also a must have skill for acquiring and manipulating data. They build production-ready systems using best-practice containerisation technologies, ETL tools and APIs. The Data Engineer Not everyone working on a data science project is a data scientist.

phData

FEBRUARY 7, 2024

Snowpark is the set of libraries and runtimes in Snowflake that securely deploy and process non-SQL code, including Python, Java, and Scala. On the server side, runtimes include Python, Java, and Scala in the warehouse model or Snowpark Container Services (public preview). filter(col("id") == 1).select(col("name"),

ODSC - Open Data Science

APRIL 3, 2023

Data Wrangling: Data Quality, ETL, Databases, Big Data The modern data analyst is expected to be able to source and retrieve their own data for analysis. Competence in data quality, databases, and ETL (Extract, Transform, Load) is essential. SQL excels with big data and statistics, making it important in order to query databases.

The MLOps Blog

SEPTEMBER 7, 2023

From writing code for doing exploratory analysis, experimentation code for modeling, ETLs for creating training datasets, Airflow (or similar) code to generate DAGs, REST APIs, streaming jobs, monitoring jobs, etc. Implementing these practices can enhance the efficiency and consistency of ETL workflows.

Smart Data Collective

MAY 20, 2019

For frameworks and languages, there’s SAS, Python, R, Apache Hadoop and many others. The popular tools, on the other hand, include Power BI, ETL, IBM Db2, and Teradata. SQL programming skills, specific tool experience — Tableau for example — and problem-solving are just a handful of examples.

Pickl AI

JULY 3, 2023

Here are steps you can follow to pursue a career as a BI Developer: Acquire a solid foundation in data and analytics: Start by building a strong understanding of data concepts, relational databases, SQL (Structured Query Language), and data modeling.

Pickl AI

MARCH 3, 2025

Key skills include SQL, data visualization, and business acumen. Essential skills include SQL, data visualization, and strong analytical abilities. Technical Skill Development Master SQL for database querying and manipulation. Learn programming languages like Python or R for advanced Data Analysis and automation.

phData

AUGUST 22, 2024

Snowflake Cortex stood out as the ideal choice for powering the model due to its direct access to data, intuitive functionality, and exceptional performance in handling SQL tasks. uses: actions/setup-python@v4 with: python-version: '3.10' - name: Install dependencies run: | python -m pip install --upgrade pip pip install -r ./python/requirements.txt

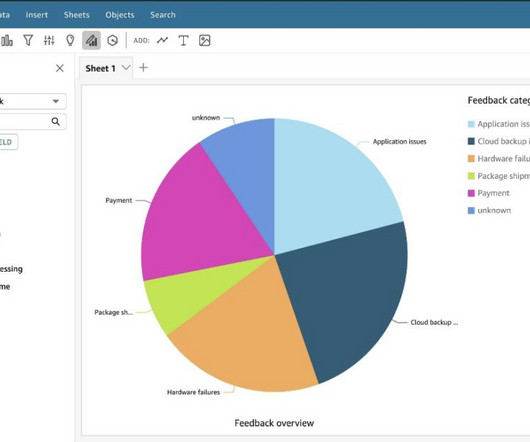

AWS Machine Learning Blog

JUNE 25, 2024

In addition, the generative business intelligence (BI) capabilities of QuickSight allow you to ask questions about customer feedback using natural language, without the need to write SQL queries or learn a BI tool. Prompt engineering To invoke Amazon Bedrock, you can follow our code sample that uses the Python SDK.

The MLOps Blog

OCTOBER 20, 2023

Example template for an exploratory notebook | Source: Author How to organize code in Jupyter notebook For exploratory tasks, the code to produce SQL queries, pandas data wrangling, or create plots is not important for readers. If a reviewer wants more detail, they can always look at the Python module directly. Redshift).

The MLOps Blog

JANUARY 26, 2024

Related article How to Build ETL Data Pipelines for ML See also MLOps and FTI pipelines testing Once you have built an ML system, you have to operate, maintain, and update it. All of them are written in Python. Python is the only prerequisite for the course, and your first ML system will consist of just three different Python scripts.

Pickl AI

APRIL 26, 2024

It covers essential topics such as SQL queries, data visualization, statistical analysis, machine learning concepts, and data manipulation techniques. Key Takeaways SQL Mastery: Understand SQL’s importance, join tables, and distinguish between SELECT and SELECT DISTINCT. How do you join tables in SQL?
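For the join and `SELECT` versus `SELECT DISTINCT` questions, a small sketch may help. The `customers` and `orders` tables and their contents are invented for the example, and SQLite (through Python's `sqlite3` module) is assumed only because it needs no setup.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, item TEXT);
    INSERT INTO customers VALUES (1, 'Ada'), (2, 'Grace');
    INSERT INTO orders VALUES (1, 1, 'book'), (2, 1, 'pen'), (3, 2, 'book');
    """
)

# Inner join: one output row per matching (customer, order) pair.
join_sql = """
    SELECT c.name
    FROM customers AS c
    JOIN orders AS o ON o.customer_id = c.id
    ORDER BY c.name
"""
all_names = [row[0] for row in conn.execute(join_sql)]

# Same join, but DISTINCT collapses duplicate result rows.
distinct_sql = """
    SELECT DISTINCT c.name
    FROM customers AS c
    JOIN orders AS o ON o.customer_id = c.id
    ORDER BY c.name
"""
distinct_names = [row[0] for row in conn.execute(distinct_sql)]

print(all_names)
print(distinct_names)
```

Ada has two orders, so the plain `SELECT` returns her name twice while `SELECT DISTINCT` returns it once.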

Pickl AI

APRIL 6, 2023

Strong programming language skills in at least one of the languages like Python, Java, R, or Scala. Hands-on experience working with SQLDW and SQL-DB. Answer : Polybase helps optimize data ingestion into PDW and supports T-SQL. Sound knowledge of relational databases or NoSQL databases like Cassandra. What is Polybase?

Pickl AI

SEPTEMBER 19, 2024

It also supports ETL (Extract, Transform, Load) processes, making it essential for data warehousing and analytics. It provides Java, Scala, Python, and R APIs, making it accessible to many developers. Spark SQL Spark SQL is a module that works with structured and semi-structured data. What is Apache Spark?

ODSC - Open Data Science

JANUARY 18, 2024

Data scientists typically have strong skills in areas such as Python, R, statistics, machine learning, and data analysis. For example, if you’re a talented Python programmer, there may be other packages, libraries, and frameworks that you are familiar with. With that said, each skill may be used in a different manner.

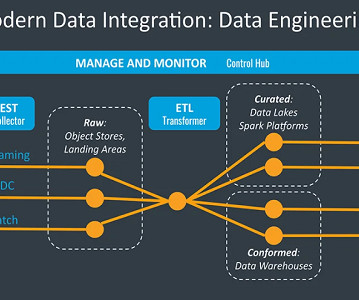

Alation

JANUARY 17, 2023

Reverse ETL tools. The modern data stack is also the consequence of a shift in analysis workflow, from extract, transform, load (ETL) to extract, load, transform (ELT). A Note on the Shift from ETL to ELT. In the past, data movement was defined by ETL: extract, transform, and load. Extract, load, transform (ELT) tools.
