Building a Scalable ETL with SQL + Python

KDnuggets

APRIL 21, 2022

This post will look at building a modular ETL pipeline that transforms data with SQL and visualizes it with Python and R.

KDnuggets

JANUARY 19, 2023

In this article, we will discuss use cases and methods for using ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) processes along with SQL to integrate data from various sources.

Analytics Vidhya

APRIL 29, 2022

Introduction Azure data factory (ADF) is a cloud-based ETL (Extract, Transform, Load) tool and data integration service which allows you to create a data-driven workflow. The post From Blob Storage to SQL Database Using Azure Data Factory appeared first on Analytics Vidhya. In this article, I’ll show […].

NOVEMBER 27, 2024

While customers can perform some basic analysis within their operational or transactional databases, many still need to build custom data pipelines that use batch or streaming jobs to extract, transform, and load (ETL) data into their data warehouse for more comprehensive analysis. Create dbt models in dbt Cloud.

Analytics Vidhya

FEBRUARY 21, 2023

Introduction SQL is a database programming language created for managing and retrieving data from relational databases like MySQL, Oracle, and SQL Server. SQL (Structured Query Language) is the common language for relational databases. In other words, SQL is a language that communicates with databases.

KDnuggets

APRIL 27, 2022

A Brief Introduction to Papers With Code; Machine Learning Books You Need To Read In 2022; Building a Scalable ETL with SQL + Python; 7 Steps to Mastering SQL for Data Science; Top Data Science Projects to Build Your Skills.

IBM Data Science in Practice

JANUARY 13, 2025

By Santhosh Kumar Neerumalla, Niels Korschinsky & Christian Hoeboer. Introduction This blog post describes how to manage and orchestrate high-volume Extract-Transform-Load (ETL) loads using a serverless process based on Code Engine. Thus, we use an Extract-Transform-Load (ETL) process to ingest the data.

KDnuggets

NOVEMBER 15, 2021

Learn how to level up your Data Pipelines!

Data Science Blog

MAY 20, 2024

It also supports a wide range of data warehouses, analytical databases, data lakes, frontends, and pipelines/ETL. This includes the creation of SQL Code, DACPAC files, SSIS packages, Data Factory ARM templates, and XMLA files. Pipelines/ETL : It supports SQL Server Integration Packages (SSIS), Azure Data Factory 2.0

Smart Data Collective

SEPTEMBER 8, 2021

The ETL process is defined as the movement of data from its source to destination storage (typically a data warehouse) for future use in reports and analyses. Understanding the ETL Process. Before you understand what an ETL tool is, you need to understand the ETL process first. Types of ETL Tools.

Hacker News

NOVEMBER 24, 2024

Typed, declarative ETL and query language that compiles to SQL.

Dataversity

OCTOBER 16, 2024

Structured query language (SQL) is one of the most popular programming languages, with nearly 52% of programmers using it in their work. SQL has outlasted many other programming languages due to its stability and reliability.

Data Science Dojo

OCTOBER 31, 2024

Key Skills Proficiency in SQL is essential, along with experience in data visualization tools such as Tableau or Power BI. Strong analytical skills and the ability to work with large datasets are critical, as is familiarity with data modeling and ETL processes. Familiarity with machine learning, algorithms, and statistical modeling.

Analytics Vidhya

AUGUST 29, 2022

This requires developing a lot of ETL jobs and transforming the data to guarantee a consistent structure for making it available at any next step in the […]. This article was published as a part of the Data Science Blogathon. The post Understand Apache Drill and its Working appeared first on Analytics Vidhya.

Smart Data Collective

AUGUST 4, 2022

One of the biggest challenges they face is managing their SQL servers. When dealing with Structured Query Language (SQL), and programming in general, knowing the data types available to you in a given framework is pivotal to being efficient at your job. In SQL Server this comes in the form of the CAST command. Problem Statement.

Analytics Vidhya

FEBRUARY 20, 2023

Introduction Azure data factory (ADF) is a cloud-based data ingestion and ETL (Extract, Transform, Load) tool. The data-driven workflow in ADF orchestrates and automates data movement and data transformation.

AWS Machine Learning Blog

APRIL 16, 2024

They then use SQL to explore, analyze, visualize, and integrate data from various sources before using it in their ML training and inference. Previously, data scientists often found themselves juggling multiple tools to support SQL in their workflow, which hindered productivity.

Data Science Blog

SEPTEMBER 19, 2023

This brings reliability to data ETL (Extract, Transform, Load) processes, query performances, and other critical data operations. The following Terraform script will create an Azure Resource Group, a SQL Server, and a SQL Database. So why using IaC for Cloud Data Infrastructures?

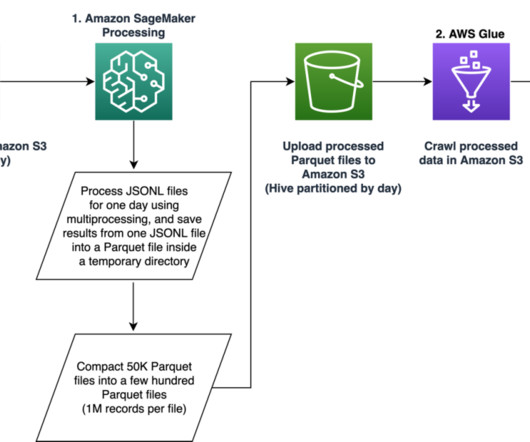

AWS Machine Learning Blog

DECEMBER 14, 2023

Our pipeline belongs to the general ETL (extract, transform, and load) process family that combines data from multiple sources into a large, central repository. The solution does not require porting the feature extraction code to use PySpark, as required when using AWS Glue as the ETL solution. session.Session().region_name

IBM Journey to AI blog

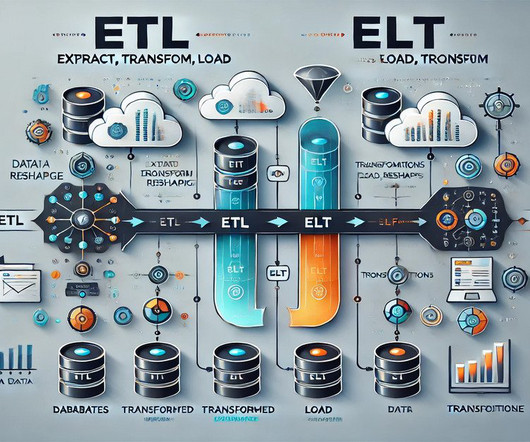

MAY 15, 2024

Two of the more popular methods, extract, transform, load (ETL) and extract, load, transform (ELT), are both highly performant and scalable. ETL/ELT tools typically have two components: a design time (to design data integration jobs) and a runtime (to execute data integration jobs).

Hacker News

MARCH 6, 2025

High-performance, low-footprint SQL database written in C++. Supports powerful features like JOIN, CDC, UPSERT, and LOOKUP, enabling real-time analytics and ETL at scale. Process millions of rows per second from Kafka, Pulsar, or ClickHouse, and seamlessly write results back.

Hacker News

NOVEMBER 19, 2024

Here are a few of the things that you might do as an AI Engineer at TigerEye: - Design, develop, and validate statistical models to explain past behavior and to predict future behavior of our customers’ sales teams - Own training, integration, deployment, versioning, and monitoring of ML components - Improve TigerEye’s existing metrics collection and (..)

ODSC - Open Data Science

APRIL 6, 2023

Though both are great to learn, what gets left out of the conversation is a simple yet powerful programming language that everyone in the data science world can agree on, SQL. But why is SQL, or Structured Query Language, so important to learn? Let’s start with the first clause often learned by new SQL users, the WHERE clause.

AWS Machine Learning Blog

NOVEMBER 20, 2024

She has experience across analytics, big data, ETL, cloud operations, and cloud infrastructure management. He has experience across analytics, big data, and ETL. In the Configure VPC and security group section, choose the VPC and subnets where your Aurora MySQL database is located, and choose the default VPC security group.

Smart Data Collective

MAY 31, 2022

It Started Reverse ETL. ETL is the source of its origin. To understand how data activation is unique and where it can help your business in powerful ways, you have to start with reverse ETL.

Data Science Blog

JULY 20, 2024

Automation: Creates SQL code, DACPAC files, SSIS packages, Data Factory ARM templates, and XMLA files. Broad support: Compatible with various database management systems such as MS SQL Server and Azure Synapse Analytics. Data lakes: Supports MS Azure Blob Storage.

phData

MARCH 14, 2024

Two popular players in this area are Alteryx Designer and Matillion ETL, both offering strong solutions for handling data workflows with Snowflake Data Cloud integration. Matillion ETL is purpose-built for the cloud, operating smoothly on top of your chosen data warehouse. Today we will focus on Snowflake as our cloud product.

Pickl AI

DECEMBER 15, 2024

Familiarise yourself with ETL processes and their significance. ETL Process: Extract, Transform, Load processes that prepare data for analysis. Can You Explain the ETL Process? The ETL process involves three main steps: Extract: Data is collected from various sources. How Do You Ensure Data Quality in a Data Warehouse?
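The three steps named in this teaser (extract, transform, load) can be sketched in a few lines of Python. This is only an illustrative outline, not code from the linked article: the CSV content, the `orders` table, and the function names are hypothetical, and an in-memory SQLite database stands in for a real data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw input standing in for an export from a source system.
RAW_CSV = """order_id,amount,country
1,10.50,us
2,,de
3,7.25,US
"""


def extract(text):
    """Extract: read rows from the source (here, an in-memory CSV)."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: drop rows with a missing amount, fix types and casing."""
    cleaned = []
    for row in rows:
        if not row["amount"]:
            continue  # skip incomplete records
        cleaned.append(
            (int(row["order_id"]), float(row["amount"]), row["country"].upper())
        )
    return cleaned


def load(conn, rows):
    """Load: write the cleaned rows into the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, amount REAL, country TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")  # stand-in for a warehouse connection
load(conn, transform(extract(RAW_CSV)))
total_by_country = conn.execute(
    "SELECT country, SUM(amount) FROM orders GROUP BY country"
).fetchall()
```

Keeping each stage in its own function makes it straightforward to swap the source or destination without touching the cleaning logic.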

Pickl AI

OCTOBER 17, 2024

Summary: The ETL process, which consists of data extraction, transformation, and loading, is vital for effective data management. Introduction The ETL process is crucial in modern data management. What is ETL? ETL stands for Extract, Transform, Load.

Pickl AI

OCTOBER 6, 2024

Summary: This blog explores the key differences between ETL and ELT, detailing their processes, advantages, and disadvantages. This blog explores the fundamental concepts of ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform), two pivotal methods in modern data architectures. What is ETL?
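To make the ETL versus ELT distinction concrete, the sketch below follows the ELT order: raw records are loaded first, untouched, and the transformation then runs as SQL inside the destination. It is a rough illustration under stated assumptions, not the linked article's method: the `raw_orders` and `orders` tables and the sample rows are hypothetical, and SQLite stands in for a warehouse.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for a warehouse connection

# Load: land the raw records as-is, with every column stored as text.
conn.execute("CREATE TABLE raw_orders (order_id TEXT, amount TEXT, country TEXT)")
conn.executemany(
    "INSERT INTO raw_orders VALUES (?, ?, ?)",
    [("1", "10.50", "us"), ("2", "", "de"), ("3", "7.25", "US")],
)

# Transform: clean and type the data with SQL, inside the destination.
conn.execute(
    """
    CREATE TABLE orders AS
    SELECT CAST(order_id AS INTEGER) AS order_id,
           CAST(amount AS REAL)      AS amount,
           UPPER(country)            AS country
    FROM raw_orders
    WHERE amount <> ''
    """
)

rows = conn.execute(
    "SELECT order_id, amount, country FROM orders ORDER BY order_id"
).fetchall()
```

In an ETL flow the same cleaning would happen in application code before any row reaches the destination; here the raw table is kept, so the transformation can be rerun or revised later.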

Dataversity

MARCH 26, 2024

Writing data to an AWS data lake and retrieving it to populate an AWS RDS MS SQL database involves several AWS services and a sequence of steps for data transfer and transformation. This process leverages AWS S3 for the data lake storage, AWS Glue for ETL operations, and AWS Lambda for orchestration.

Pickl AI

OCTOBER 17, 2024

Summary: This article explores the significance of ETL Data in Data Management. It highlights key components of the ETL process, best practices for efficiency, and future trends like AI integration and real-time processing, ensuring organisations can leverage their data effectively for strategic decision-making.

Data Science Dojo

JULY 6, 2023

These tools provide data engineers with the necessary capabilities to efficiently extract, transform, and load (ETL) data, build data pipelines, and prepare data for analysis and consumption by other applications. dbt focuses on transforming raw data into analytics-ready tables using SQL-based transformations.

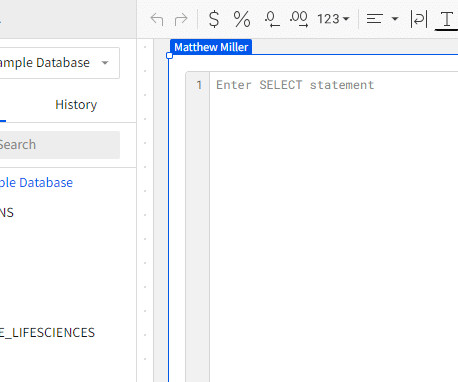

DECEMBER 11, 2024

Data processing and SQL analytics Analyze, prepare, and integrate data for analytics and AI using Amazon Athena, Amazon EMR, AWS Glue, and Amazon Redshift. With the SQL editor, you can query data lakes, databases, data warehouses, and federated data sources. In the next cell, switch the connection type from PySpark to SQL.

Pickl AI

JUNE 7, 2024

Summary: Choosing the right ETL tool is crucial for seamless data integration. At the heart of this process lie ETL Tools—Extract, Transform, Load—a trio that extracts data, tweaks it, and loads it into a destination. Choosing the right ETL tool is crucial for smooth data management. What is ETL?

Pickl AI

NOVEMBER 4, 2024

Each database type requires its specific driver, which interprets the application’s SQL queries and translates them into a format the database can understand. The driver manages the connection to the database, processes SQL commands, and retrieves the resulting data. INSERT : Add new records to a table.
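The driver's role described here (open a connection, send SQL commands, return the results) can be illustrated with Python's standard-library `sqlite3` module, which acts as the driver for SQLite. The linked article may be describing a different driver technology; the `customers` table and the sample name below are hypothetical.

```python
import sqlite3  # standard-library driver for SQLite

# The driver manages the connection to the database.
conn = sqlite3.connect(":memory:")
cur = conn.cursor()

# SQL commands are passed to the driver as strings.
cur.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")

# INSERT: add a new record; the ? placeholder lets the driver bind the value.
cur.execute("INSERT INTO customers (name) VALUES (?)", ("Ada",))
conn.commit()

# The driver retrieves the resulting data as Python tuples.
cur.execute("SELECT id, name FROM customers")
result = cur.fetchall()
conn.close()
```

Using placeholders rather than string concatenation leaves value quoting to the driver and avoids SQL injection.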

Data Science Dojo

MAY 10, 2023

Here, we outline the essential skills and qualifications that pave way for data science careers: Proficiency in Programming Languages – Mastery of programming languages such as Python, R, and SQL forms the foundation of a data scientist’s toolkit.

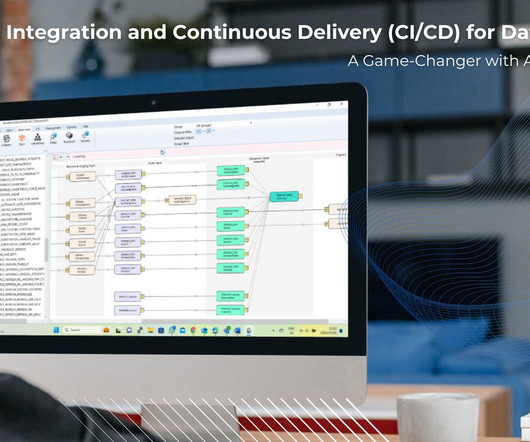

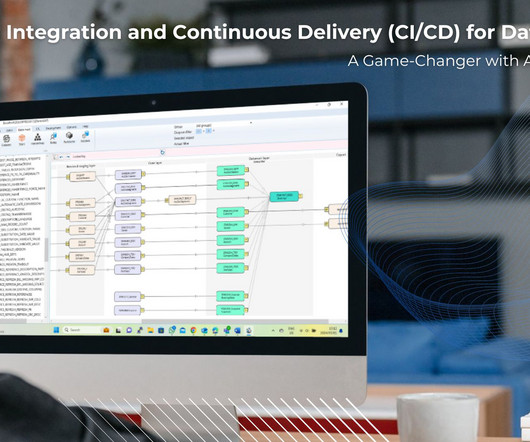

phData

JULY 12, 2023

Unlike traditional methods that rely on complex SQL queries for orchestration, Matillion Jobs provide a more streamlined approach. By converting SQL scripts into Matillion Jobs, users can take advantage of the platform’s advanced features for job orchestration, scheduling, and sharing. What is Matillion ETL?

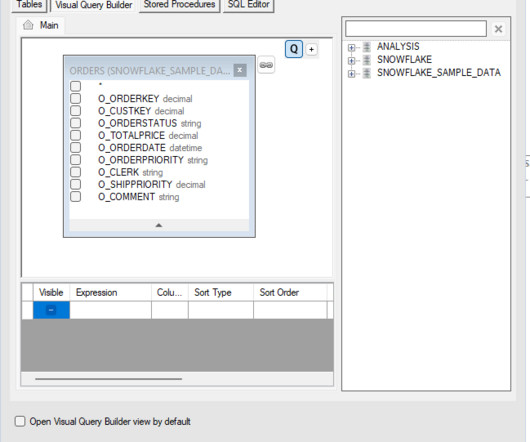

phData

APRIL 21, 2023

Unlike traditional methods that rely on complex SQL queries for orchestration, Matillion Jobs provide a more streamlined approach. By converting SQL scripts into Matillion Jobs, users can take advantage of the platform’s advanced features for job orchestration, scheduling, and sharing. In our case, this table is “orders.”

phData

JULY 10, 2024

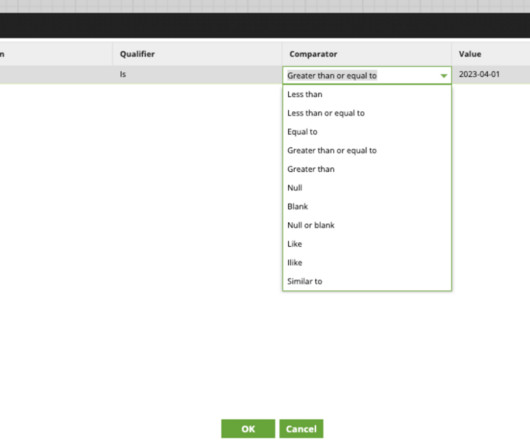

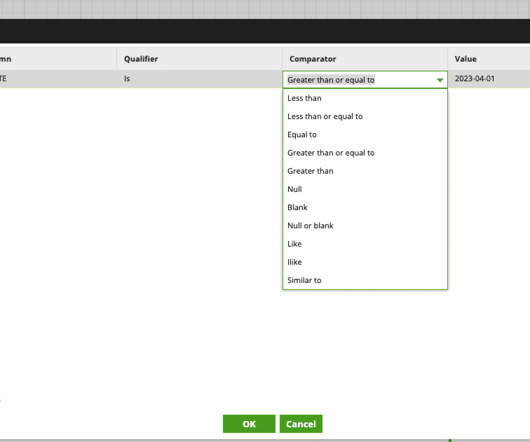

One of Sigma’s key features is its support for custom SQL queries and CSV file uploads. In this blog, we’ll explain why custom SQL and CSVs are important, demonstrate how to use these features in Sigma Computing, and provide some best practices to help you get started.

Tableau

MARCH 30, 2021

we’ve added new connectors to help our customers access more data in Azure than ever before: an Azure SQL Database connector and an Azure Data Lake Storage Gen2 connector. Azure SQL Database. Many customers rely on Azure SQL Database as a managed, cloud-hosted version of SQL Server. Kristin Adderson. March 30, 2021.

Smart Data Collective

APRIL 29, 2020

Extract, Transform, Load (ETL). Redshift is the product for data warehousing, and Athena provides SQL data analytics. It has useful features, such as an in-browser SQL editor for queries and data analysis, various data connectors for easy data ingestion, and automated data preprocessing and ingestion. Master data management.

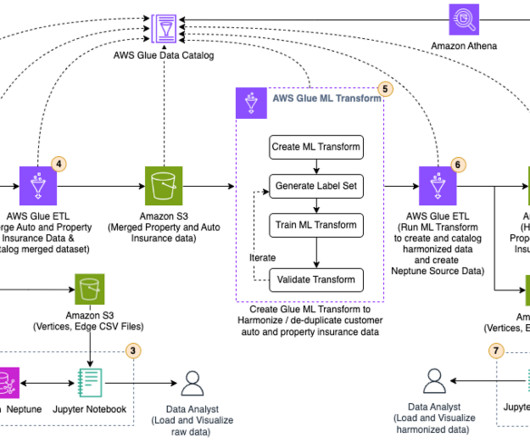

JUNE 26, 2023

Transform raw insurance data into CSV format acceptable to Neptune Bulk Loader, using an AWS Glue extract, transform, and load (ETL) job. Run an AWS Glue ETL job to merge the raw property and auto insurance data into one dataset and catalog the merged dataset. Under Data classification tools, choose Record Matching.
