Knowledge Distillation: Making AI Models Smaller, Faster & Smarter

Data Science Dojo

JANUARY 30, 2025

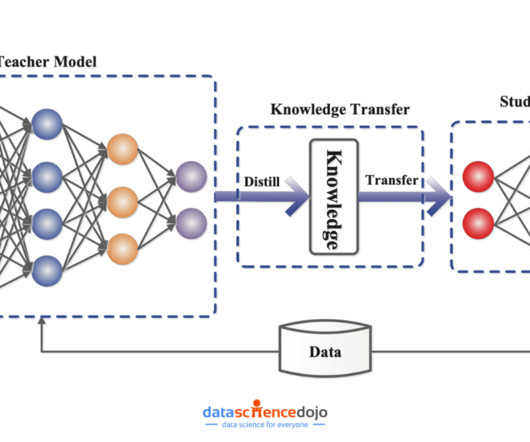

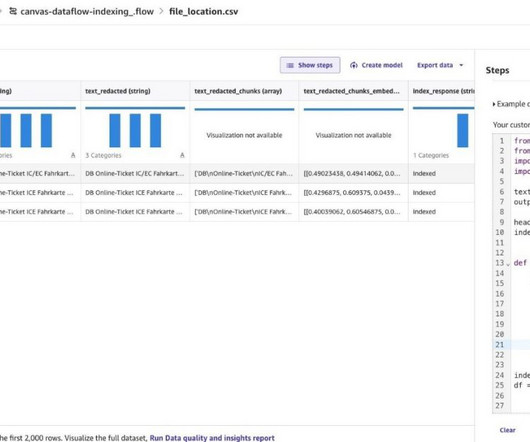

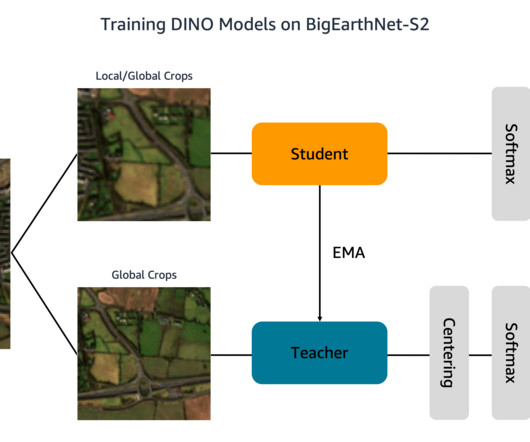

Large AI models deliver strong results but are expensive and slow to deploy. Knowledge distillation addresses this issue by letting a smaller, more efficient model learn from a larger, more complex one. The smaller model keeps similar performance while being smaller and faster. This blog provides a beginner-friendly explanation of knowledge distillation, its benefits, real-world applications, and challenges, along with a step-by-step implementation in Python.
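To make the "smaller model learns from a larger model" idea concrete, here is a minimal, framework-free sketch of a distillation loss. It assumes the widely used soft-target formulation (Hinton et al., 2015). The small "student" is trained to match the temperature-softened output distribution of the large "teacher", blended with ordinary cross-entropy on the true label. The function names, the temperature of 4.0, the weight alpha of 0.5, and the hard-coded logits are illustrative assumptions, not values or code from this blog. In practice the teacher logits would come from a trained large model.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """KL(p || q) for two discrete distributions over the same classes."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(
    student_logits: Sequence[float],
    teacher_logits: Sequence[float],
    true_label: int,
    temperature: float = 4.0,  # illustrative value (assumption)
    alpha: float = 0.5,        # weight on the teacher's soft targets (assumption)
) -> float:
    """Blend of soft-target (teacher) loss and hard-label cross-entropy."""
    # Soft targets: compare the softened teacher and student distributions.
    # Multiplying by T^2 keeps this term's gradient scale comparable across temperatures.
    p_teacher = softmax(teacher_logits, temperature)
    p_student_soft = softmax(student_logits, temperature)
    soft_loss = kl_divergence(p_teacher, p_student_soft) * temperature ** 2

    # Hard targets: ordinary cross-entropy against the ground-truth label.
    p_student = softmax(student_logits, 1.0)
    hard_loss = -math.log(p_student[true_label])

    return alpha * soft_loss + (1.0 - alpha) * hard_loss


if __name__ == "__main__":
    teacher = [8.0, 2.0, 1.0]         # hypothetical logits from a large, confident model
    close_student = [7.5, 2.2, 0.9]   # student that already mimics the teacher
    far_student = [1.0, 3.0, 2.0]     # student that disagrees with the teacher
    print(distillation_loss(close_student, teacher, true_label=0))
    print(distillation_loss(far_student, teacher, true_label=0))
```

The student whose outputs resemble the teacher's gets a much lower loss than the one that disagrees. Minimizing this loss during training is what pushes a compact model toward the larger model's behavior. The temperature matters because softened teacher probabilities expose how the teacher ranks the wrong classes, which carries more information than a single hard label.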
